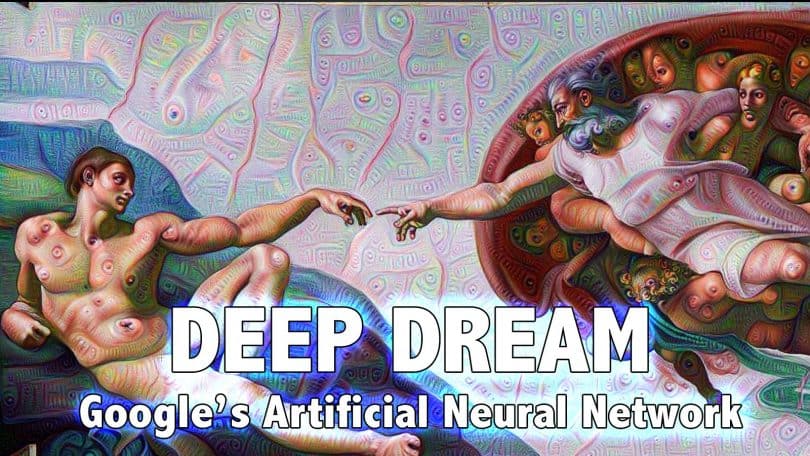

The bizarre DeepDream images from Google’s neural networks are going around the world. Originally, they were meant to help researchers understand their own artificial intelligence.

Rarely have computers erred as beautifully as they did recently in Google’s labs. In mid-June, Google researchers impressively demonstrated the fantastical images that artificial neural networks produce when their hidden search patterns are coaxed out of them. The surreal works went around the world, whereupon Google published code with which these networks can also be simulated on a home computer.

Since then, a contest has been under way for the most spectacular photo and even video manipulations, which are shared on Twitter under the hashtag #DeepDream. Somewhat lost in the background is the question of what the Google researchers were actually aiming for with their “dreamers”. The answer is just as fascinating: they wanted to better understand how the artificial intelligence they themselves had created actually works.

Inception is the network that created the magical worlds. Last year, Inception won the Large Scale Visual Recognition Challenge, in which neural networks compete to classify as many photos as possible correctly: is it a face, a dog, a bird, a car, a landscape? In this sorting work, Google’s network “set a new standard”, as the creators of Inception proudly wrote in a technical paper.

“This kind of network has been around for a long time,” says Aditya Khosla, a researcher at the Computer Science and Artificial Intelligence Laboratory of the Massachusetts Institute of Technology (MIT). Khosla also developed a second network that the Google researchers used in addition to Inception to create their DeepDream images. He knows exactly what neural networks can do. “Sure, there have been massive improvements in the categorization of objects over the last couple of years, some of them from Google and Facebook, too.”

The concept of neural networks was conceived in 1943 by the neuroscientists Warren McCulloch and Walter Pitts of the University of Chicago. Instead of transistors, McCulloch and Pitts proposed artificial nerve cells (neurons) as computing units, connected to one another to form circuits. In contrast to transistors, which calculate with zeros and ones, artificial neurons only send a signal when the sum of their inputs exceeds a certain threshold value. They do not work with binary logic, like any modern computer, but with threshold logic. Today’s artificial neural networks, however, are simulated on computers, so binary logic emulates the threshold logic.
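A threshold unit of this kind can be sketched in a few lines of Python. This is only an illustration of the principle, not code from McCulloch and Pitts or from Google; the function name, the weights and the threshold values are assumptions chosen for the example.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) only if the weighted sum of the inputs exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0

# Hypothetical settings: with unit weights and a threshold of 1.5,
# the neuron fires only when both inputs are active (a logical AND).
and_outputs = [threshold_neuron([a, b], [1, 1], 1.5) for a in (0, 1) for b in (0, 1)]

# With a threshold of 0.5, the same neuron fires when at least one input is active (OR).
or_outputs = [threshold_neuron([a, b], [1, 1], 0.5) for a in (0, 1) for b in (0, 1)]

print(and_outputs)  # [0, 0, 0, 1]
print(or_outputs)   # [0, 1, 1, 1]
```

The same unit behaves differently depending only on its threshold, which is why circuits of such neurons can stand in for logic gates.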

If you want to understand how Inception and similar networks work, look at the general structure of artificial neural networks. Their architecture almost always follows the same principles: hundreds or thousands of artificial neurons sit in stacked layers and are connected by (simulated) lines. Via its lines, a neuron can activate its neighbors in its own layer and cells of the next layer. The uppermost layer, or input layer, functions as a sensor that is fed with the data the network is supposed to sort. In the case of Inception these are images, but in other networks they can also be sounds. Each pixel activates precisely one neuron in the input layer. The lowest layer, or output layer, on the other hand, usually has only a handful of neurons, one for each image category. These neurons indicate which category an image presented to the input layer belongs to.
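How a signal passes from the pixels of the input layer to one output neuron per category can be illustrated with a toy network. The sketch below is an assumption-laden miniature, not Inception: the weights are set by hand rather than learned, the two categories "bright" and "dark" are invented, and a smooth sigmoid function replaces the hard threshold.

```python
import math

def layer(inputs, weights, biases):
    """One layer: each neuron sums its weighted inputs and applies a sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(x * w for x, w in zip(inputs, neuron_weights)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs

def classify(pixels, network, categories):
    """Run the activity from the input layer to the output layer."""
    activity = pixels  # input layer: one value per pixel
    for weights, biases in network:
        activity = layer(activity, weights, biases)
    best = max(range(len(activity)), key=lambda i: activity[i])
    return categories[best], activity

# Hypothetical hand-set weights for a 2x2 image (4 pixels) and two categories.
network = [
    ([[2, 2, 2, 2], [-2, -2, -2, -2]], [-4, 4]),  # hidden layer: 2 neurons
    ([[4, -4], [-4, 4]], [0, 0]),                 # output layer: 1 neuron per category
]
categories = ["bright", "dark"]

label, scores = classify([1, 1, 1, 1], network, categories)
print(label, [round(s, 3) for s in scores])
```

For an all-white image the "bright" output neuron is the most active one, for an all-black image the "dark" neuron.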

Training the network

But before such a network does this job well, it has to be trained. “With each image, a wave of activity runs through the entire network, from the input layer to the output layer,” says Khosla. “If the output layer assigns the image to the wrong category, it is told so and sends an error signal back through the network. This is called supervised learning.” The error signal causes the connections between the neurons to be adjusted throughout the network so that the error becomes less likely. Through this error correction, the artificial neurons are, so to speak, trained to respond to certain image properties.
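The principle of supervised learning can be shown with a single trainable neuron. This is a deliberately reduced sketch: a real network such as Inception passes the error signal back through many layers, whereas here it travels only one step. The two-pixel "images", their labels, the learning rate and the number of passes are all assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, epochs=1000, rate=0.5):
    """Adjust the connection weights a little after every labeled example."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        for pixels, label in samples:
            output = sigmoid(sum(x * w for x, w in zip(pixels, weights)) + bias)
            error = label - output  # error signal: how far off was the answer?
            for i, x in enumerate(pixels):
                weights[i] += rate * error * x  # strengthen or weaken each connection
            bias += rate * error
    return weights, bias

def predict(pixels, weights, bias):
    return 1 if sigmoid(sum(x * w for x, w in zip(pixels, weights)) + bias) > 0.5 else 0

# Hypothetical task: category 1 means "the left pixel is bright".
samples = [([1, 0], 1), ([1, 1], 1), ([0, 1], 0), ([0, 0], 0)]

weights, bias = train(samples)
predictions = [predict(pixels, weights, bias) for pixels, _ in samples]
print(predictions)
```

After training, the weight of the left pixel has grown large while the right pixel is largely ignored: the neuron has been "trained" for one image property.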

Google’s Inception is one of the so-called convolutional networks. In this type of network, after successful training, the neurons of the second layer react to light-dark contrasts in a particular orientation, i.e. to straight edges such as the outline of a house or its windows. The next lower layer reacts to combinations of these edges, for example house-like contours, and so on. The deeper the layer in the network, the more complex the image structures to which its neurons react.
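What it means for a neuron to react to a light-dark contrast in a certain orientation can be sketched with a small filter that is slid across an image. The image and the filter values below are invented for illustration; in a trained network such filters are learned, not written by hand.

```python
def convolve(image, kernel):
    """Slide the kernel over the image and record its response at each position.

    As is usual in convolutional networks, the kernel is not flipped
    (strictly speaking this is a cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    response = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        response.append(row)
    return response

# Hypothetical 3x4 image: dark on the left (0), light on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hypothetical filter that responds to a dark-to-light step from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

response = convolve(image, vertical_edge)
print(response)  # [[0, 2, 0], [0, 2, 0]]
```

The filter stays silent in the uniformly dark and uniformly light regions and responds only where the vertical edge lies.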

In deep neural networks such as Inception with its 22 layers, however, it is often unclear which shapes the neurons in the deeper layers respond to. This is the point at which Google’s researchers cannot accurately predict what their artificial intelligence is doing. All the rules in the neural network are defined and mathematically simple. However, each neuron computes a nonlinear function: it can react to small changes in its input with large changes in its output. As a result, the entire network behaves nonlinearly, and it is not possible to predict in advance what will happen in the network during training.

“That is why many groups have developed methods to visualize the properties of the deep neurons,” says Khosla. They want to understand when and how misinterpretations are triggered. “You show a well-trained network an image and let the wave of activity run through the network. But instead of sending back an error signal, you over-activate the cells in a chosen deep layer. The signal manipulated in this way runs back through the network to the input layer.” As a result, those properties to which the deep layer responded most strongly are exaggerated in the original image.

This is also how the Google programmers create their “DeepDream” images, with one small modification: they feed the overpainted image, with the visualized properties of the selected layer, back into the network. The neurons in that layer thus get to see even more of the shapes they already specialize in, and exaggerate these properties once again. This process is repeated until the shapes that the selected layer “recognizes” stand out clearly in the original image.

This is how Google’s visualization tool turns clouds into birds and trees. If a layer in the network specializes in bird shapes, it reacts to everything in the cloud image that looks even remotely like a bird. With each update of the image, the region of the original image that resembles a bird becomes slightly more bird-like. After many repetitions, a cloud turns into something like a cockatoo.
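The feedback loop can be imitated in miniature. The following sketch is not Google's published code: a one-dimensional list of numbers stands in for the image, a single hand-written filter stands in for the selected layer, and the step size and number of repetitions are arbitrary assumptions. What it does share with the method described above is the principle: measure how strongly the layer responds, then change the input, not the network, so that it responds even more strongly.

```python
def activations(signal, kernel):
    """Response of one 'layer': a filter slid over the signal, negatives cut to 0."""
    k = len(kernel)
    return [max(0.0, sum(signal[p + j] * kernel[j] for j in range(k)))
            for p in range(len(signal) - k + 1)]

def energy(signal, kernel):
    """Overall strength of the layer's response to the signal."""
    return 0.5 * sum(a * a for a in activations(signal, kernel))

def dream_step(signal, kernel, step=0.1):
    """Nudge the input so that the layer responds even more strongly."""
    grad = [0.0] * len(signal)
    for p, a in enumerate(activations(signal, kernel)):
        for j, w in enumerate(kernel):
            grad[p + j] += a * w  # gradient of the energy with respect to the input
    scale = sum(abs(g) for g in grad) / len(grad) or 1.0  # normalize the step size
    return [x + step * g / scale for x, g in zip(signal, grad)]

cloud = [0.0, 0.1, 0.3, 0.1, 0.0, 0.0]  # a faint bump in an otherwise flat signal
bump_detector = [-1.0, 2.0, -1.0]        # hypothetical filter that "likes" bumps

dream = cloud
for _ in range(10):
    dream = dream_step(dream, bump_detector)

print(energy(cloud, bump_detector), energy(dream, bump_detector))
print([round(x, 2) for x in dream])
```

With every repetition the faint bump that the filter weakly detected at the start is drawn more sharply into the signal, just as a vaguely bird-like cloud region becomes more bird-like in the image.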

“That makes the pictures look spectacular,” says Khosla. “But I would rather call this method a toy.” Although Google does very good work in categorizing images, the visualization itself is not a great achievement.

Researchers have known about these fantasies for some time

The method of over-activating layers to exaggerate the favorite shapes of the neurons hidden there was not invented by Google, but by a research group from Oxford, which first presented it in 2013. “I think they have become so famous because it was Google that presented them, because people outside my research area see something spectacular in the pictures, and also because the name Inception refers to the movie of the same name. But it does not really impress anyone in the research community.”

Concerns that the DeepDream images show machines closing in on the human mind are also misplaced. Professor Kunihiko Fukushima from Tokyo was indeed inspired by the structure of the brain’s visual system when he proposed convolutional networks in the early 1980s. “But networks like Inception are still as far away from thinking machines with consciousness and intention as they were in Fukushima’s day,” says Khosla.