Introduction to Data Pipelines

At the core of every modern data-driven enterprise lies a force of complexity and wonder: the data pipeline. Data pipelines are the lifelines of data-driven ecosystems, helping businesses navigate a vast sea of information. These networks of automated processes turn raw data into actionable insights. Picture them as artful architects of data flow, weaving together sources, transformations, and destinations with harmonious finesse.

Data Pipeline

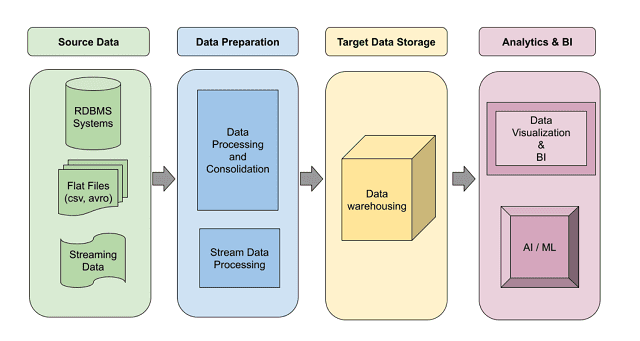

What precisely is a data pipeline? At its essence, it is an orchestrated sequence of steps that guides data from diverse sources, such as databases, APIs, logs, and streaming platforms, toward a final destination such as a data warehouse. Like a grand river of information, a pipeline gathers these streams of data and carries them onward with care.

But the data’s journey doesn’t end there. Raw data must be transformed, much as a caterpillar metamorphoses into a butterfly. This process involves cleansing, filtering, and shaping, which prepare the data for its destined role: providing valuable insights and knowledge.

Data Processing

Beyond the backstage of transformation, data steps into the spotlight of processing. Here, statistical methods reign supreme: algorithms and analytics are applied to the data, patterns emerge, correlations form, and hidden truths reveal themselves, guiding businesses toward smarter decisions and strategic success.

Data Loading

As the data reaches its final crescendo, it’s time for the grand finale: data loading. The spotlight shifts to storing the refined data in databases, business intelligence tools, or applications. There the data is ready for action, fueling reports, dashboards, and real-time applications and giving stakeholders the intelligence they need to thrive.

Intriguing, isn’t it? The complexity and diversity of data pipelines make them the backbone of data-driven enterprises. They turn streams of data into a symphony of insights and enrich businesses operating in a constantly evolving world. So, let’s embark on a journey to unravel how data pipelines work and discover the instrumental role they play in data-driven success.

What is a Data Pipeline?

A data pipeline, a labyrinth of interconnected processes, forms the backbone of data-driven systems. It’s akin to a digital highway, facilitating the seamless flow of information from diverse sources to a common destination.

A data pipeline combines several stages: data ingestion, storage, transformation, processing, and loading. It gathers data from an array of sources such as databases, APIs, logs, and streaming platforms. The collected data then lands in storage repositories like data lakes or data warehouses, where it waits to be explored.

But the story doesn’t end here. Raw data requires refinement. Data transformation cleans and shapes the data into a cohesive, usable form. Like an alchemist’s touch, this stage lays the foundation for actionable insights.

Now comes the climax: data processing. This is where the true magic happens. Statistical analysis, machine learning, and complex computations combine to uncover patterns and correlations concealed in the data. The data becomes a beacon of intelligence, guiding businesses toward informed decisions and strategic triumphs.

And as the curtain falls, the grand finale, data loading, takes center stage. The refined data is stored in databases, business intelligence tools, or applications, where stakeholders can use it for reporting, analysis, and real-time applications.

In a world governed by data, these pipelines are unsung heroes. They orchestrate the flow of data and ensure it resonates through the organization. As unsung conductors, they are the key to unlocking the potential of data, transforming raw information into actionable insights. These intricate pipelines therefore remain indispensable for any organization striving to thrive in the data-driven era.

The Importance of Data Pipelines in Modern Businesses

In the dynamic realm of modern businesses, data reigns supreme. With the explosive growth of data sources and volumes, the need for efficient data management has never been more crucial. This is where data pipelines step into the limelight. They have become the cornerstone of success for data-driven organizations.

Data Pipeline Architects

Data pipelines are diligent architects. They construct a robust framework that handles data with finesse, navigating the intricacies of data ingestion, transformation, and loading. In doing so, they empower businesses to unleash the true potential of their data.

Benefits

One primary benefit of data pipelines is their capacity to streamline and automate the entire data flow. Gone are the days of manual data handling and integration. Through orchestrated processes, these pipelines move data harmoniously from stage to stage, ensuring consistency and reliability throughout the journey.
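Automation means a stage has to survive transient failures, such as a flaky API or a briefly locked table, without a human stepping in. The sketch below shows one common approach, retrying with exponential backoff; `run_with_retries`, its parameters, and the `flaky_extract` step are hypothetical names chosen for illustration, not part of any particular pipeline framework.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(step: Callable[[], T], max_attempts: int = 3,
                     base_delay: float = 0.5,
                     sleep: Callable[[float], None] = time.sleep) -> T:
    """Run a pipeline step, retrying with exponential backoff on failure.

    Hypothetical helper: the wait before retry n is base_delay * 2**(n - 1).
    In practice you would catch only errors known to be transient.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return step()
        except Exception:
            attempt += 1
            if attempt >= max_attempts:
                raise  # out of attempts: surface the original error
            sleep(base_delay * 2 ** (attempt - 1))


# Usage: a hypothetical extract step that fails twice, then succeeds.
calls = {"n": 0}


def flaky_extract():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return ["row1", "row2"]


delays = []  # record the waits instead of actually sleeping
result = run_with_retries(flaky_extract, sleep=delays.append)
```

Injecting `sleep` keeps the helper testable; here `delays` ends up as `[0.5, 1.0]`.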

Challenges

Moreover, data pipelines tackle the challenge of data diversity head-on. Data originates from various sources, arrives in different formats, and flows at varying velocities. Bringing it into harmony can feel like conducting a symphony of chaos. Data pipelines serve as virtuoso conductors, orchestrating this variety into a coherent ensemble.

Beyond mere organization, data pipelines hold the key to real-time insights. In the fast-paced business landscape, speed is of the essence. Because pipelines process and analyze data efficiently, businesses can seize opportunities, respond to challenges promptly, and make informed decisions in the blink of an eye.

Data Governance

Data pipelines also play a pivotal role in data governance and security. With data privacy and compliance concerns soaring, organizations must strengthen their data handling practices. Because pipelines consist of well-defined, controlled processes, they help ensure data integrity and safeguard sensitive information, reducing the risk of data breaches and maintaining customer trust.
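One concrete governance control a pipeline can apply is pseudonymizing sensitive fields before data leaves a restricted zone. The sketch below replaces assumed-sensitive fields with keyed HMAC-SHA256 tokens, which stay stable for joins without exposing raw values; the field list, the `pseudonymize` name, and the demo key are hypothetical, and a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac

# Hypothetical set of fields treated as sensitive in this sketch.
SENSITIVE_FIELDS = ("email", "phone")


def pseudonymize(record: dict, key: bytes, fields=SENSITIVE_FIELDS) -> dict:
    """Return a copy of record with sensitive fields replaced by keyed hashes.

    The same value and key always yield the same token, so downstream joins
    still work, but the raw value cannot be read back without the key.
    """
    masked = dict(record)  # never mutate the caller's record
    for field in fields:
        if field in masked:
            value = str(masked[field]).encode("utf-8")
            masked[field] = hmac.new(key, value, hashlib.sha256).hexdigest()
    return masked


# Usage with a demo key (load a real key from a secret store instead).
row = {"id": 7, "email": "ada@example.com", "amount": 12.5}
safe = pseudonymize(row, key=b"demo-secret-key")
```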

Scalability

As businesses scale and their data needs grow, the elasticity of data pipelines becomes paramount. These flexible constructs can adapt and expand to accommodate increasing data volumes, so businesses are equipped to handle data deluges without faltering.

In data analytics, data pipelines are the precursors to success. By delivering clean, refined, and readily accessible data, they let data analysts and data scientists focus on their core competencies: exploring the data and gleaning valuable insights without the burden of data management.

Data pipelines stand as the backbone of modern businesses, enabling efficient and effective data handling. From ingestion and transformation to processing and loading, they navigate the complexities of data, empowering organizations to harness its true power and flourish in the data-driven age.

Key Components of a Data Pipeline

The data pipeline is a sophisticated orchestration of essential components, the beating heart of any data-driven enterprise. It comprises a series of interconnected stages, each with a distinct purpose, that together ensure the seamless journey of data from origin to destination.

Data Ingestion:

Data ingestion lies at the inception of the data pipeline; it is the gateway to the data ecosystem. This crucial stage collects data from a myriad of sources, including databases, APIs, logs, and real-time streams. Because data pours in from such diverse channels, the pipeline must be versatile enough to accommodate various data formats and velocities.
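To make "various data formats" concrete, here is a minimal ingestion sketch that reads two hypothetical sources, a CSV export and a JSON Lines event feed, into one list of dictionaries tagged with their origin. In-memory strings stand in for real files or API responses, and the source names and `READERS` registry are assumptions for illustration.

```python
import csv
import io
import json
from typing import Dict, Iterator, List


def read_csv(text: str, source: str) -> Iterator[Dict]:
    # CSV gives every value as a string; type coercion happens later.
    for row in csv.DictReader(io.StringIO(text)):
        yield {"source": source, **row}


def read_json_lines(text: str, source: str) -> Iterator[Dict]:
    # JSON Lines: one JSON object per line, types preserved.
    for line in text.splitlines():
        if line.strip():  # skip blank lines
            yield {"source": source, **json.loads(line)}


# Registry mapping a format name to its reader.
READERS = {"csv": read_csv, "jsonl": read_json_lines}


def ingest(sources) -> List[Dict]:
    """Collect records from (name, format, payload) triples into one list."""
    records: List[Dict] = []
    for name, fmt, payload in sources:
        records.extend(READERS[fmt](payload, name))
    return records


batch = ingest([
    ("orders_db_export", "csv", "order_id,amount\n1,9.99\n2,15.00\n"),
    ("clickstream", "jsonl",
     '{"user": "u1", "event": "view"}\n{"user": "u2", "event": "buy"}\n'),
])
```

Adding a new format then means registering one more reader rather than touching the rest of the pipeline.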

Data Storage:

Once the data is gathered, it is stored in secure repositories like data lakes or data warehouses. These repositories act as fortified fortresses, housing the data until it is needed for further processing and analysis.
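A common way to land raw data in a lake is to write it unchanged into directories partitioned by source and date, using the Hive-style `key=value` folder naming that engines such as Spark and Hive recognize. The sketch below assumes a local filesystem path standing in for object storage; `land_raw` and the file name `part-0000.jsonl` are hypothetical.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def land_raw(records, root: Path, source: str, day: date) -> Path:
    """Write records as JSON Lines under root/source=<name>/date=<YYYY-MM-DD>/.

    Overwriting the partition file makes a re-run for the same day
    idempotent instead of appending duplicates.
    """
    partition = root / f"source={source}" / f"date={day.isoformat()}"
    partition.mkdir(parents=True, exist_ok=True)
    out = partition / "part-0000.jsonl"
    with out.open("w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec, sort_keys=True) + "\n")
    return out


# Usage: land two records in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    path = land_raw([{"id": 1}, {"id": 2}], Path(tmp), "orders", date(2024, 1, 31))
    print(path.relative_to(tmp).as_posix())
```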

Data Transformation:

Data in its raw state often resembles a chaotic puzzle, lacking structure and uniformity. Data transformation brings order. It encompasses processes like data cleaning, filtering, and normalization, which refine the data into a cohesive and coherent form and prepare it for the next stage in the pipeline.

Data Processing:

Once transformed, the data enters the realm of data processing, where its full potential is uncovered. Here, data analysts and data scientists apply statistical methods, complex algorithms, and analytics to extract valuable insights and patterns. This processing stage is the foundation for data-driven decision-making and strategic action.

Data Loading:

The final act of the data pipeline is data loading. At this stage, the processed and enriched data takes center stage, ready to fuel the organization’s data-driven endeavors. The refined data is stored in databases, business intelligence tools, or applications, where it awaits the eager minds of stakeholders, ready to unveil its secrets.

Data pipelines thrive on efficiency and automation, relieving teams of the burden of manual data handling. As the architects of data movement, they orchestrate data flow with precision and finesse. They bring together diverse data sources and accommodate both structured and unstructured data.

Real-time insights are the jewels in the crown of data pipelines. The ability to process and analyze data in real time is essential in today’s fast-paced business landscape. Data pipelines help businesses seize opportunities, respond to challenges as they happen, and adapt swiftly, which helps them stay ahead in the competitive race.

Data pipelines play a crucial role in data governance and security. With data privacy and compliance at the forefront of concerns, these pipelines help ensure data integrity and protect sensitive information, which builds and maintains trust among customers and stakeholders.

Data pipelines show remarkable elasticity as businesses evolve and data volumes surge. A well-designed pipeline scales to handle the deluge of data without compromising efficiency or performance.

The key components of a data pipeline work together to help data-driven organizations thrive in a data-rich landscape. From ingestion to storage and from transformation to processing, these components form a powerful ensemble that delivers actionable insights and strategic advantages. Pipelines remain an indispensable foundation for success in the age of data and analytics.

Data Ingestion

Data ingestion is the opening act in the grand symphony of data pipelines and the gateway to a vast world of information. Like a skillful conductor, it gathers data from diverse sources, each contributing a unique melody to the composition.

Data Collection

At its core, data ingestion involves collecting data from a myriad of channels. These sources can include databases, APIs, logs, streaming platforms, and real-time sensors, all contributing in harmony to the pipeline’s melody. The challenge lies in embracing diverse data formats and velocities and in handling both structured and unstructured data.

Data Stream

The data stream is like a river of information, and the pipeline must absorb bursts of incoming data. From sudden spikes in data flow to calm lulls, the pipeline must remain agile, adjusting its tempo to keep data flowing smoothly.

The complexity of data ingestion lies in handling real-time data streams. Data flows incessantly, like a heartbeat, and demands immediate processing and analysis. Ingestion must keep pace with the velocity of incoming data and ensure that no piece of information is lost.
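One way to absorb bursts without losing events is a bounded buffer between the producer and the consumer: when the buffer fills, the producer waits (backpressure) instead of dropping data, and the consumer drains events in micro-batches. The sketch below uses an in-process `queue.Queue` as a stand-in for a real message broker; `run_stream`, `consume_in_batches`, and the sizes are hypothetical.

```python
import queue
import threading
from typing import Callable, Iterable, List

_STOP = object()  # sentinel marking the end of the stream


def consume_in_batches(buffer: queue.Queue, batch_size: int,
                       handle_batch: Callable[[List], None]) -> None:
    """Drain the buffer in micro-batches until the stop sentinel arrives."""
    batch: List = []
    while True:
        item = buffer.get()  # blocks until an event is available
        if item is _STOP:
            break
        batch.append(item)
        if len(batch) >= batch_size:
            handle_batch(batch)
            batch = []
    if batch:
        handle_batch(batch)  # flush the final partial batch


def run_stream(events: Iterable, batch_size: int = 3,
               buffer_size: int = 10) -> List[List]:
    # Bounded queue: put() blocks when full, so a burst slows the producer
    # down instead of overflowing memory or dropping events.
    buffer: queue.Queue = queue.Queue(maxsize=buffer_size)
    handled: List[List] = []
    consumer = threading.Thread(target=consume_in_batches,
                                args=(buffer, batch_size, handled.append))
    consumer.start()
    for event in events:
        buffer.put(event)
    buffer.put(_STOP)
    consumer.join()
    return handled


print(run_stream(range(7), batch_size=3, buffer_size=2))
```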

Resilience to Data Source Changes

Moreover, data pipelines must be resilient to changes in their data sources. New sources emerge and existing ones evolve, so the pipeline must adapt to these changes while keeping data flowing without interruption.
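A typical source change is schema drift: a field gets renamed, dropped, or added. The sketch below maps each incoming record onto a fixed target schema, resolving renamed fields through an alias table, filling missing fields with defaults, and setting unexpected new fields aside instead of failing. The schema, aliases, and `conform` function are hypothetical examples.

```python
from typing import Any, Dict

# Hypothetical target schema: field name -> default when a source omits it.
TARGET_SCHEMA: Dict[str, Any] = {"customer_id": None, "email": None, "country": "unknown"}

# Hypothetical aliases for fields a source has renamed over time.
FIELD_ALIASES: Dict[str, str] = {"cust_id": "customer_id", "e_mail": "email"}


def conform(record: Dict[str, Any]) -> Dict[str, Any]:
    """Map a source record onto the target schema, tolerating drift."""
    # Resolve old field names to their current names.
    renamed = {FIELD_ALIASES.get(k, k): v for k, v in record.items()}
    # Keep every target field, falling back to its default.
    conformed = {field: renamed.get(field, default)
                 for field, default in TARGET_SCHEMA.items()}
    # Park unknown fields for review instead of breaking the load.
    conformed["_extra"] = {k: v for k, v in renamed.items() if k not in TARGET_SCHEMA}
    return conformed


print(conform({"cust_id": 1, "e_mail": "a@b.c", "loyalty_tier": "gold"}))
```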

Data ingestion sets the stage for the entire data pipeline. It lays the groundwork for the subsequent steps: storage, transformation, processing, and loading. Like a skilled conductor guiding a symphony, ingestion directs the data flow, ensuring the data moves smoothly through the pipeline, ready to be refined into insights.

Data ingestion is the prelude to the enchanting melody of data pipelines, embracing the diversity and bursts of incoming data. This essential component sets the tempo for the flow of data and ensures a harmonious journey from source to destination. As the foundation of data-driven success, ingestion enables businesses to transform raw data into valuable insights.

Data Transformation

In data pipelines, data transformation is the transformative force that turns raw data into refined brilliance. It shapes and molds the data, chiseling away imperfections and revealing its true potential.

Data Cleaning

Data transformation encompasses a medley of processes, each contributing to the harmonious whole. Data cleaning, like a gentle breeze, sweeps away inconsistencies and errors to ensure the data’s integrity. Filtering, akin to a painter’s brushstroke, selects the relevant pieces of data and eliminates noise and distractions. Data normalization, like the tuning of instruments, aligns the data to a standardized format so it can be analyzed seamlessly.

Adaptability

The complexity of data transformation lies in its adaptability. Each dataset is like a unique instrument in an orchestra and demands tailored treatment. The transformation process must match the specific characteristics of the data, accommodating variations in structure, format, and content.

Iterative Refinement

Moreover, data transformation is an iterative dance. As new data arrives and insights are gained, the transformation process evolves, adjusting its steps to unlock deeper understanding. This iterative nature keeps data pipelines in sync with the evolving data landscape and keeps their output relevant and accurate.

For unstructured data, data transformation takes on an artistic flair. Like a poet weaving words into a sonnet, it unearths meaning from text, images, and other unstructured formats. Natural language processing and image recognition become the tools of the transformation artisan, unlocking insights hidden in unstructured data.

Preparing for Processing

At its core, data transformation prepares the data for the data processing stage, setting the scene for insightful analysis and complex computations. Like a backstage magician, it refines the data into a coherent and consistent format, ready to dazzle the audience with its insights.

Data transformation plays a pivotal role in the symphony of data pipelines, refining raw data into a harmonious ensemble of insights. With its diverse functions and adaptable nature, it is the key to unlocking the full potential of data, empowering businesses to make informed decisions and create a symphony of success.

Data Processing

Data processing is the crescendo of the data pipeline symphony. Taking center stage, it wields its analytical prowess to extract meaningful insights from the refined data. Like an ensemble of virtuoso musicians, it combines statistical methods, advanced analytics, and machine learning to reveal patterns and trends hidden in the data’s depths.

In data analytics, data processing serves as the guiding compass, steering businesses toward informed decisions and strategic actions. Through complex computations and statistical analysis, organizations glean valuable insights, much like discovering treasures buried beneath layers of data.

Versatility

The versatility of data processing is a sight to behold. For structured data, it draws on statistical techniques like regression, clustering, and hypothesis testing to unravel relationships and dependencies. For unstructured data, it employs natural language processing and sentiment analysis to decode the nuances of text and uncover sentiments and opinions.

Training Models

But data processing’s charm goes beyond analysis. In machine learning, data processing dons a magician’s cloak: it trains models to predict outcomes, classify data, and even generate new insights. From recommendation engines to image recognition, it breathes life into intelligent applications and enriches the pipeline with innovative possibilities.

Complexity

The complexity of data processing lies in its hunger for computational power. As data volumes grow and analytical algorithms become more intricate, data processing increasingly relies on parallel processing and distributed computing. This appetite for computational resources demands scalable architectures, which keep processing fast and efficient.
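Parallel and distributed processing usually follows a map-reduce shape: split the data into partitions, compute a partial result for each partition in parallel, then combine the partials. The single-machine sketch below uses Python's `concurrent.futures`; the partition layout and `parallel_mean` name are assumptions, and a cluster engine would distribute the same pattern across machines.

```python
from concurrent.futures import Executor, ProcessPoolExecutor
from typing import Sequence


def parallel_mean(partitions: Sequence[Sequence[float]], executor: Executor) -> float:
    """Map-reduce mean: workers sum partitions in parallel, the caller combines them."""
    # Map: each worker sums one partition. The builtin `sum` pickles cleanly,
    # so this also works with a process pool.
    partial_sums = list(executor.map(sum, partitions))
    # Reduce: combine partial sums and partition sizes into a global mean.
    total = sum(partial_sums)
    count = sum(len(p) for p in partitions)
    return total / count


if __name__ == "__main__":
    data = [[1.0, 2.0, 3.0], [4.0, 5.0], [6.0]]
    # Processes sidestep CPython's GIL for CPU-bound work.
    with ProcessPoolExecutor(max_workers=2) as pool:
        print(parallel_mean(data, pool))  # 3.5
```

Passing the executor in keeps the choice open: a `ThreadPoolExecutor` suits I/O-bound steps, a process pool suits CPU-bound ones.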

In the dynamic world of business, data processing often has to happen in real time. As data streams in, it demands immediate attention, so processing must operate with agility and nimbleness. Real-time data processing paves the way for instantaneous insights, empowering businesses to seize opportunities and respond to challenges in the blink of an eye.
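A building block of real-time processing is windowed aggregation: grouping a stream of timestamped events into fixed time windows and computing a metric per window. The sketch below implements tumbling (fixed, non-overlapping) windows over an in-memory list of `(timestamp, key)` pairs; the event shape and function name are assumptions. A production stream processor would additionally emit each window as it closes and handle late-arriving events.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(events: Iterable[Tuple[int, str]],
                           window_seconds: int) -> Dict[Tuple[int, str], int]:
    """Count events per key in fixed, non-overlapping time windows.

    Each event is (unix_timestamp, key); its window start is the timestamp
    rounded down to a multiple of window_seconds.
    """
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for ts, key in events:
        window_start = ts - ts % window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


events = [(0, "view"), (30, "view"), (59, "buy"), (60, "view"), (125, "view")]
print(tumbling_window_counts(events, window_seconds=60))
```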

Data processing takes the spotlight in the symphony of data pipelines, using analytical prowess and machine learning to transform data into valuable insights. From statistical analysis to machine learning models, it reveals the melody hidden within the data, empowering businesses to make data-driven decisions and create a harmonious symphony of success.

Data Loading

As the final act of the data pipeline symphony, data loading steps into the spotlight, concluding the data’s journey from raw sources to actionable insights. Like a diligent conductor, data loading ensures that the refined, processed data finds its rightful place in databases, business intelligence tools, or applications, where it stands ready to empower stakeholders with valuable information.

Data Transfer

Data loading involves transferring data from the processing stage to its destination. The destination depends on the specific needs of the organization: it could be a relational database for structured data, a NoSQL database for semi-structured data, or a data warehouse for analytical purposes.

The complexity of data loading lies in handling diverse data formats while meeting the specific requirements of the target destination. Different databases may demand different data structures or data models, so loading mechanisms must accommodate these nuances while ensuring data integrity.
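The sketch below illustrates two integrity safeguards during loading, using SQLite from Python's standard library as a stand-in for a production database: values are coerced to the target column types, and the whole batch is written in one transaction, so a row that violates a constraint rolls back the entire batch. Re-loading an existing key updates it (an upsert via `ON CONFLICT ... DO UPDATE`, which needs SQLite 3.24 or later). The `orders` table and `load_orders` function are hypothetical.

```python
import sqlite3
from typing import Iterable, Mapping


def load_orders(conn: sqlite3.Connection, rows: Iterable[Mapping]) -> int:
    """Upsert rows into a hypothetical orders table inside one transaction."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS orders (
               order_id INTEGER PRIMARY KEY,
               customer TEXT NOT NULL,
               amount   REAL NOT NULL CHECK (amount >= 0)
           )"""
    )
    # Coerce incoming values (often strings) to the column types.
    payload = [(int(r["order_id"]), str(r["customer"]), float(r["amount"])) for r in rows]
    with conn:  # commits on success, rolls back the whole batch on any error
        conn.executemany(
            """INSERT INTO orders (order_id, customer, amount) VALUES (?, ?, ?)
               ON CONFLICT(order_id) DO UPDATE SET
                   customer = excluded.customer,
                   amount   = excluded.amount""",
            payload,
        )
    return len(payload)


conn = sqlite3.connect(":memory:")
load_orders(conn, [{"order_id": 1, "customer": "ada", "amount": "9.99"}])
```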

Data-driven businesses must also consider real-time data loading. Timeliness is essential, so loading must operate swiftly and accurately to ensure that the latest insights and information are available for immediate decision-making.
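Frequent loads usually move only what changed since the previous run. A common technique is a watermark: remember the latest `updated_at` value already loaded, and next time select only newer rows. The sketch below assumes ISO-8601 UTC timestamp strings in one fixed format (so string order matches time order); `incremental_batch` is a hypothetical name, and a production version would also guard against late rows that share the watermark timestamp.

```python
from typing import Dict, Iterable, List, Tuple


def incremental_batch(rows: Iterable[Dict], watermark: str) -> Tuple[List[Dict], str]:
    """Select rows updated after the watermark and return the advanced watermark."""
    fresh = sorted((r for r in rows if r["updated_at"] > watermark),
                   key=lambda r: r["updated_at"])
    # Advance the watermark only if something new was found.
    new_watermark = fresh[-1]["updated_at"] if fresh else watermark
    return fresh, new_watermark


rows = [
    {"id": 1, "updated_at": "2024-05-01T09:00:00Z"},
    {"id": 2, "updated_at": "2024-05-01T11:30:00Z"},
    {"id": 3, "updated_at": "2024-05-01T10:15:00Z"},
]
batch, watermark = incremental_batch(rows, "2024-05-01T10:00:00Z")
```

Here `batch` holds rows 3 and 2 (in time order), and persisting `watermark` between runs makes the next load start where this one stopped.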

Managing Data

Data loading also entails managing the storage and retention of historical data. Over time, data volumes can become substantial, so efficient loading practices must include data purging and archiving to keep performance high and storage costs under control.
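A simple retention policy moves rows older than a cutoff into an archive table and then deletes them from the hot table. Running both statements in a single transaction makes the move atomic: a row ends up in exactly one of the two tables. The sketch uses SQLite; the `events` and `events_archive` tables and the `archive_and_purge` function are hypothetical.

```python
import sqlite3


def archive_and_purge(conn: sqlite3.Connection, cutoff: str) -> int:
    """Move events dated before cutoff into events_archive; return rows moved."""
    # Empty copy of the events table's columns (WHERE 0 selects no rows).
    conn.execute(
        "CREATE TABLE IF NOT EXISTS events_archive AS SELECT * FROM events WHERE 0"
    )
    with conn:  # INSERT and DELETE commit together or not at all
        conn.execute(
            "INSERT INTO events_archive SELECT * FROM events WHERE event_date < ?",
            (cutoff,),
        )
        cur = conn.execute("DELETE FROM events WHERE event_date < ?", (cutoff,))
    return cur.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER, event_date TEXT)")
conn.executemany("INSERT INTO events VALUES (?, ?)",
                 [(1, "2023-01-05"), (2, "2023-12-31"), (3, "2024-02-01")])
conn.commit()
moved = archive_and_purge(conn, "2024-01-01")
```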

Serving the Business

Data loading acts as the bridge connecting data processing to the business world. Once loaded into databases and applications, the data becomes the foundation for reports, dashboards, and interactive visualizations, empowering users to interact with the data and derive actionable insights.

Moreover, data loading is not a one-time affair but an ongoing process. As the pipeline evolves and new data is generated, loading must keep pace, ensuring that the latest information is seamlessly integrated into the organization’s data ecosystem.

Data loading brings the symphony of data pipelines to a harmonious close, ensuring that refined, processed data finds its rightful place in databases and applications. Because it can handle diverse formats, manage real-time data, and accommodate evolving needs, data loading is the gateway to a world of actionable insights, enabling businesses to make informed decisions and create a crescendo of success in the data-driven age.

Data Pipeline Workflow: Step-by-Step

The data pipeline workflow is a meticulously choreographed sequence of steps. Each step contributes to the seamless flow of data from source to destination. Like the movements of a well-synchronized dance, each step plays a crucial role in orchestrating the symphony of data processing and analysis.

Let’s delve into the step-by-step journey of a data pipeline.

Data Ingestion

The data pipeline’s journey begins with data ingestion. In this initial step, data from various sources, such as databases, APIs, logs, and streaming platforms, is collected and brought into the pipeline. This stage requires the pipeline to be agile and versatile, capable of accommodating diverse data formats and handling real-time data streams.

Data Storage

Once the data is gathered, it seeks sanctuary in storage repositories like data lakes or data warehouses. These repositories serve as centralized reservoirs. They store the data securely until it’s ready for further processing and analysis.

Data Transformation

Data must be transformed to reveal the information it holds. Data transformation involves cleansing, filtering, and reshaping the data so that it is consistent and in a usable format for analysis. This stage plays a pivotal role in refining the data for insightful exploration.

Data Processing

As the data completes its metamorphosis, it moves to the data processing stage. Here, the pipeline performs statistical analysis, applies advanced analytics, and may even use machine learning algorithms to extract valuable insights from the data. This step is the heart of the data pipeline, where the true magic of data-driven decision-making takes place.

Data Loading

With the data processed and enriched with insights, it’s time for data loading to shine. In this final step, the refined data finds its home in databases, business intelligence tools, or applications, where it awaits the eager minds of stakeholders. This step ensures the data is readily available for reporting, dashboards, and real-time applications.
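The steps above can be sketched end to end as a chain of small functions, one per stage. Everything here is a simplified, hypothetical stand-in: `ingest` returns hard-coded sales rows instead of reading real sources, the storage step is only marked with a comment, and `load` writes to an in-memory SQLite table in place of a warehouse.

```python
import sqlite3
from typing import Dict, Iterable, List


def ingest() -> List[Dict]:
    # Stand-in for reading from databases, APIs, logs, or streams.
    return [
        {"region": "north", "amount": "120.0"},
        {"region": "south", "amount": "80.5"},
        {"region": "north", "amount": None},  # missing value
        {"region": "south", "amount": "19.5"},
    ]


def transform(rows: Iterable[Dict]) -> List[Dict]:
    # Clean: drop rows without an amount and convert amounts to numbers.
    return [{"region": r["region"], "amount": float(r["amount"])}
            for r in rows if r["amount"] is not None]


def process(rows: Iterable[Dict]) -> Dict[str, float]:
    # Analyze: total revenue per region.
    totals: Dict[str, float] = {}
    for r in rows:
        totals[r["region"]] = totals.get(r["region"], 0.0) + r["amount"]
    return totals


def load(totals: Dict[str, float], conn: sqlite3.Connection) -> None:
    # Store results where reports and dashboards can query them.
    with conn:
        conn.execute("CREATE TABLE IF NOT EXISTS revenue (region TEXT PRIMARY KEY, total REAL)")
        conn.executemany("INSERT OR REPLACE INTO revenue VALUES (?, ?)", totals.items())


def run_pipeline(conn: sqlite3.Connection) -> None:
    raw = ingest()
    # A storage step would persist `raw` to a lake or warehouse here.
    load(process(transform(raw)), conn)


conn = sqlite3.connect(":memory:")
run_pipeline(conn)
print(conn.execute("SELECT region, total FROM revenue ORDER BY region").fetchall())
```

Keeping each stage a separate function makes it easy to test, retry, or swap one stage without touching the others.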

Data Consumption

Beyond the data pipeline’s boundaries, the data continues its journey into the hands of end-users and stakeholders. They consume and interact with the data. They derive valuable insights to inform business strategies and drive informed decision-making.

Monitoring and Maintenance

The data pipeline’s workflow doesn’t end with data consumption. Monitoring and maintenance are essential to ensure the pipeline’s health and performance. Regular checks of data quality and completeness are imperative, along with performance optimizations that keep the pipeline running smoothly.
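Quality and completeness checks can run automatically after each batch and block a bad load before it reaches stakeholders. The sketch below checks two common rules, a minimum row count and a maximum share of missing values per required column; the thresholds and the `check_quality` function are hypothetical defaults to tune per dataset.

```python
from typing import Dict, List, Sequence


def check_quality(rows: Sequence[Dict], required: Sequence[str],
                  min_rows: int = 1, max_null_rate: float = 0.05) -> List[str]:
    """Return human-readable problems; an empty list means the batch passed."""
    problems: List[str] = []
    # Completeness: did we receive roughly the volume we expected?
    if len(rows) < min_rows:
        problems.append(f"row count {len(rows)} below minimum {min_rows}")
    # Quality: are required columns mostly populated?
    for column in required:
        nulls = sum(1 for r in rows if r.get(column) is None)
        rate = nulls / len(rows) if rows else 1.0
        if rate > max_null_rate:
            problems.append(f"{column}: null rate {rate:.0%} exceeds {max_null_rate:.0%}")
    return problems


batch = [{"id": 1, "amount": 5.0}, {"id": 2, "amount": None},
         {"id": 3, "amount": 7.5}, {"id": 4, "amount": 2.0}]
print(check_quality(batch, ["id", "amount"]))
```

A scheduler could halt the pipeline, or alert an on-call engineer, whenever the returned list is non-empty.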

The data pipeline workflow unfolds like a well-orchestrated dance, moving from data ingestion to storage and then on to transformation, processing, and loading. Each step plays a vital role in refining and enriching the data before the pipeline finally delivers insights that empower businesses with valuable information. As the data-driven journey continues, monitoring and maintenance ensure the pipeline’s continued success, enabling organizations to thrive in the ever-changing world of data and analytics.

Data Transformation: Cleaning, Filtering, and Preparing Data

Data transformation is a pivotal act in the data pipeline. Like a master craftsman, it molds and refines raw data into a polished masterpiece, employing techniques such as cleaning, filtering, and preparation so the data is ready to shine in the spotlight of analysis.

Data Cleaning

The first step in data transformation is data cleaning. Like dusting the cobwebs off a hidden treasure, data cleaning eliminates inconsistencies, errors, and missing values in the data. Imputation techniques may come into play, filling in missing data points to maintain data integrity.
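A minimal cleaning pass might fix inconsistent text, drop duplicate records, and impute missing values. The sketch below assumes hypothetical customer rows with `email` and `age` fields: it normalizes email case and whitespace, keeps the first record per normalized email, and fills missing ages with the mean of the known ages (mean imputation, one of several imputation strategies).

```python
from statistics import mean
from typing import Dict, List


def clean(rows: List[Dict]) -> List[Dict]:
    """Normalize emails, drop duplicate customers, and mean-impute missing ages."""
    seen = set()
    deduped: List[Dict] = []
    for r in rows:
        email = r["email"].strip().lower()  # fix inconsistent casing/whitespace
        if email in seen:
            continue  # duplicate record for the same customer
        seen.add(email)
        deduped.append({"email": email, "age": r.get("age")})

    known = [r["age"] for r in deduped if r["age"] is not None]
    fill = mean(known) if known else None
    for r in deduped:
        if r["age"] is None:
            r["age"] = fill  # mean imputation
    return deduped


rows = [
    {"email": " Ada@Example.com ", "age": 36},
    {"email": "ada@example.com", "age": 36},
    {"email": "bob@example.com", "age": None},
    {"email": "cy@example.com", "age": 30},
]
cleaned = clean(rows)
```

Mean imputation is quick but shrinks variance; the median or a model-based estimate may suit skewed data better.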

Data Filtering

Data filtering selects the data relevant to the analysis. Filtering lets the pipeline focus on specific subsets of data, discarding noise and irrelevant information and channeling attention to the critical elements that compose the symphony of insights.

Data Preparation

Data preparation brings the data into a consistent format. This stage standardizes units, scales, and formats so that all the data speaks a common language, which in turn makes it easier to combine data from different sources.
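To illustrate standardizing units, scales, and formats, the sketch below converts weights to kilograms, rewrites dates from several source formats into ISO 8601, and min-max scales the weights into the range 0 to 1. The conversion table, accepted date formats, and field names are hypothetical; note that formats like `01/02/2024` are ambiguous between sources, so each source's format has to be known rather than guessed.

```python
from datetime import datetime
from typing import Dict, List

# Hypothetical conversion factors into the canonical unit (kilograms).
TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}

# Hypothetical date formats seen across sources, tried in order.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%m-%d-%Y")


def to_iso_date(text: str) -> str:
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")


def prepare(rows: List[Dict]) -> List[Dict]:
    """Standardize units and date formats, then min-max scale weight to [0, 1]."""
    out = [{"date": to_iso_date(r["date"]),
            "weight_kg": float(r["weight"]) * TO_KG[r["unit"]]} for r in rows]
    lo = min(r["weight_kg"] for r in out)
    hi = max(r["weight_kg"] for r in out)
    span = (hi - lo) or 1.0  # avoid dividing by zero when all weights are equal
    for r in out:
        r["weight_scaled"] = (r["weight_kg"] - lo) / span
    return out


prepared = prepare([
    {"date": "2024-02-01", "weight": "2", "unit": "kg"},
    {"date": "15/02/2024", "weight": "500", "unit": "g"},
    {"date": "02-20-2024", "weight": "1", "unit": "lb"},
])
```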

Data Transformation Challenges

Data transformation also tackles the challenge of handling outliers: extraordinary data points that deviate from the norm. Outliers may be corrected, removed, or transformed to prevent them from distorting analytical outcomes. In this way, data transformation preserves the integrity of the data symphony.
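One widely used rule for spotting outliers is Tukey's fences: values below Q1 − k·IQR or above Q3 + k·IQR, with k = 1.5 by convention, where IQR = Q3 − Q1. The sketch below clips (caps) such values to the fences rather than removing them, which is one of the options mentioned above; `clip_outliers_iqr` is a hypothetical name, and it relies on `statistics.quantiles` (Python 3.8+), which needs at least two values.

```python
from statistics import quantiles
from typing import List


def clip_outliers_iqr(values: List[float], k: float = 1.5) -> List[float]:
    """Clip values lying outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [min(max(v, low), high) for v in values]


# 500 is far outside the fences computed from the other readings, so it is capped.
print(clip_outliers_iqr([10, 12, 11, 13, 12, 11, 500]))
```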

For unstructured data such as text, data transformation becomes a linguistic maestro, and natural language processing (NLP) techniques take center stage. NLP parses the text, extracts sentiment, and recognizes entities, painting a vivid portrait of the insights in the written word.

Feature Engineering

Moreover, data transformation embraces the power of feature engineering. Feature engineering selects, creates, and combines relevant data attributes to enhance the predictive power of machine learning models, adding depth to the analysis.
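The sketch below shows the three feature-engineering moves named above on a hypothetical order record: it selects existing numeric attributes, creates new ones from the timestamp (hour, weekend flag), combines two attributes into a ratio (average item price), and one-hot encodes a categorical channel. The field names and channel list are assumptions for illustration.

```python
from datetime import datetime
from typing import Dict

# Hypothetical category set for one-hot encoding.
CHANNELS = ("web", "mobile", "store")


def engineer_features(order: Dict) -> Dict[str, float]:
    """Derive model-ready numeric features from a raw order record."""
    ts = datetime.fromisoformat(order["ordered_at"])
    amount = float(order["amount"])
    features = {
        # Selected attributes, cast to numbers.
        "amount": amount,
        "items": float(order["items"]),
        # Combined attribute: average price per item (guard against zero items).
        "avg_item_price": amount / max(order["items"], 1),
        # Created attributes from the timestamp.
        "hour": float(ts.hour),
        "is_weekend": 1.0 if ts.weekday() >= 5 else 0.0,
    }
    for channel in CHANNELS:  # one-hot encode the categorical channel
        features[f"channel_{channel}"] = 1.0 if order["channel"] == channel else 0.0
    return features


print(engineer_features({"ordered_at": "2024-03-16T14:30:00", "amount": "60",
                         "items": 3, "channel": "mobile"}))
```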

Adaptability

The complexity of data transformation lies in its adaptability. Each dataset requires a tailored approach, with specific techniques harmonized to suit the data’s unique characteristics. This adaptability ensures the data pipeline remains agile, capable of handling the ever-changing data landscape.

Data transformation stands as a masterful act in the symphony of data pipelines, refining raw data into valuable insights. Through cleaning, filtering, and preparation, it crafts a harmonious composition of data ready to enrich the rest of the pipeline. By embracing structured and unstructured data, handling outliers, and engineering features, data transformation reveals the insights hidden within the data, empowering businesses to make informed decisions and create a crescendo of success.

Data Processing: Gaining Insights and Applying Analytics

In the symphony of data pipelines, data processing takes center stage, wielding its analytical prowess to extract meaningful insights and apply advanced analytics. It orchestrates a masterpiece of information that empowers businesses to make informed decisions and chart a path to success.

Data processing starts by conducting a comprehensive analysis of the refined data. With statistical techniques, data processing explores relationships, uncovers patterns, and derives meaningful trends from the data. Descriptive statistics showcase the data’s central tendencies, while inferential statistics delve deeper into drawing conclusions and making predictions.

Data Analysis

Regression analysis, a virtuoso performance, uncovers the relationships between variables, allowing businesses to understand how one variable changes with another. Data processing guides the data through the intricate dance of regression, revealing associations and potential dependencies, although a regression on its own does not prove that a relationship is causal.
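For a single predictor, ordinary least squares has a closed form: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the means. The sketch below implements that directly; the advertising-spend and sales figures are made up for illustration.

```python
from typing import Sequence, Tuple


def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of co-deviations and squared deviations of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: ad spend (thousands) vs. sales (thousands).
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope, intercept)
```

Here each extra unit of spend is associated with two extra units of sales; whether spending *causes* the sales would need an experiment or further analysis.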

In unsupervised learning, clustering techniques organize the data into distinct groups based on similarity. These groupings provide valuable insights for segmenting customers, products, or other entities and suggest useful segmentation strategies.
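The classic clustering algorithm is k-means (Lloyd's algorithm): alternately assign each point to its nearest centroid and move each centroid to the mean of its assigned points. The sketch below clusters hypothetical customer points in two dimensions (say, spend and visit frequency). For determinism it seeds centroids with the first k points; practical implementations typically use smarter seeding such as k-means++.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _sq_dist(a: Point, b: Point) -> float:
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def kmeans(points: Sequence[Point], k: int, iterations: int = 20) -> List[int]:
    """Lloyd's k-means; returns a cluster label for each point."""
    centroids = [points[i] for i in range(k)]  # simple deterministic seeding
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        labels = [min(range(k), key=lambda c: _sq_dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = (sum(p[0] for p in members) / len(members),
                                sum(p[1] for p in members) / len(members))
    return labels


customers = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (1.2, 0.8), (9.0, 8.5)]
print(kmeans(customers, k=2))
```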

Testing

Hypothesis testing validates assumptions and inferences drawn from the data. It assesses whether observed differences are statistically significant or plausibly the result of chance, leading businesses toward confident decisions.
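A permutation test is one assumption-light way to ask whether a difference between two groups is likely due to chance: shuffle the group labels many times and see how often a difference at least as large as the observed one appears. The sketch below tests a difference in means; the order-value samples are hypothetical, the random generator is seeded for reproducibility, and the add-one correction keeps the estimated p-value above zero.

```python
import random
from typing import Sequence


def _avg(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


def permutation_test(a: Sequence[float], b: Sequence[float],
                     rounds: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test for a difference in means; returns a p-value."""
    observed = abs(_avg(a) - _avg(b))
    pooled = list(a) + list(b)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(rounds):
        rng.shuffle(pooled)  # randomly reassign group labels
        diff = abs(_avg(pooled[:len(a)]) - _avg(pooled[len(a):]))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    return (extreme + 1) / (rounds + 1)


# Hypothetical average order values under the old and new checkout page.
old_page = [12.1, 11.8, 12.4, 12.0, 12.3]
new_page = [13.0, 13.4, 12.9, 13.3, 13.1]
p_value = permutation_test(old_page, new_page)
```

A small p-value (conventionally below 0.05) suggests the difference is unlikely to be chance alone.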

Model Training

In supervised learning, data processing trains machine learning models to predict outcomes and classify data. Well-trained models provide accurate predictions and classifications based on historical patterns and features.
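The train-then-predict cycle of supervised learning can be shown with one of the simplest classifiers, nearest centroid: training computes the mean feature vector of each labeled class in historical data, and prediction assigns a new example to the class with the closest centroid. The churn labels and two-feature vectors below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]


def train_nearest_centroid(X: Sequence[Vector], y: Sequence[str]) -> Dict[str, Vector]:
    """Training: learn one centroid (mean feature vector) per class label."""
    groups: Dict[str, List[Vector]] = defaultdict(list)
    for features, label in zip(X, y):
        groups[label].append(features)
    return {label: tuple(sum(col) / len(rows) for col in zip(*rows))
            for label, rows in groups.items()}


def predict(model: Dict[str, Vector], features: Vector) -> str:
    """Prediction: return the label whose centroid is closest (squared Euclidean)."""
    return min(model, key=lambda label: sum((f - c) ** 2
                                            for f, c in zip(features, model[label])))


# Hypothetical history: (support tickets, normalized usage) -> outcome.
X = [(0.9, 0.1), (0.8, 0.2), (0.1, 0.9), (0.2, 0.8)]
y = ["churn", "churn", "stay", "stay"]
model = train_nearest_centroid(X, y)
print(predict(model, (0.7, 0.3)))
```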

Time-series analysis embraces data with a temporal dimension. It models and forecasts future trends, enabling businesses to anticipate changes and plan accordingly.
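Simple exponential smoothing is one of the most basic forecasting methods: it maintains a level that moves toward each new observation by a fraction α, so recent values weigh more than old ones. The sketch below returns the one-step-ahead forecast; the weekly sales series is hypothetical. Because the method has no trend or seasonality term, extensions such as Holt-Winters are used when those patterns matter.

```python
from typing import Sequence


def ses_forecast(series: Sequence[float], alpha: float = 0.5) -> float:
    """Simple exponential smoothing: one-step-ahead forecast.

    level_t = alpha * y_t + (1 - alpha) * level_{t-1}, starting from the first value.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    for value in series[1:]:
        level = alpha * value + (1 - alpha) * level
    return level


weekly_sales = [10, 12, 14]
print(ses_forecast(weekly_sales, alpha=0.5))
```

With α = 0.5 the level moves from 10 to 11 to 12.5, which lags the rising series; that lag is exactly what trend-aware methods correct.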

Sentiment Analysis

Sentiment analysis, a linguistic marvel, decodes the emotions and opinions hidden within textual data. It determines whether sentiments are positive, negative, or neutral. It paints a vivid picture of customer feedback and market perceptions.
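The simplest form of sentiment analysis is lexicon-based: score each word from a sentiment dictionary, flip the sign after a negation word, and classify by the total. The sketch below uses a tiny hypothetical lexicon purely to show the mechanics; real systems rely on curated lexicons or trained models that handle context far better.

```python
import re
from typing import Dict

# Tiny hypothetical lexicon: word -> sentiment score.
LEXICON: Dict[str, int] = {"great": 2, "good": 1, "love": 2,
                           "slow": -1, "bad": -1, "terrible": -2}
NEGATIONS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Classify text as positive, negative, or neutral by summing word scores."""
    score = 0
    negate = False
    for word in re.findall(r"[a-z']+", text.lower()):
        if word in NEGATIONS:
            negate = True
            continue
        value = LEXICON.get(word, 0)
        score += -value if negate else value
        negate = False  # a negation only flips the word right after it
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this product, great value!"))
```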

Data processing also leverages the power of big data, using parallel processing and distributed computing to handle vast volumes of data. This scale amplifies analytical capabilities, enabling high-performance insights and real-time processing.

Data processing shines as the guiding star in the symphony of data pipelines, extracting insights and applying advanced analytics to empower businesses. With statistical techniques, machine learning models, and the ability to handle big data, it conducts a harmonious performance of data-driven decisions and charts a path toward success.

Data Loading: Storing Data for Easy Access

As the final movement in the symphony of data pipelines, data loading takes the spotlight, gracefully concluding the journey of data from its refined state to its designated destination. Data loading ensures that the enriched data finds its rightful place in databases, business intelligence tools, or applications, where it stands ready to empower stakeholders with valuable information.

Data Transfer

Data loading involves transferring data from the processing stage to its designated storage. This destination may vary depending on the organization’s specific needs: it could be a relational database for structured data, a NoSQL database for semi-structured data, or a data warehouse for analytical purposes.

Much of the complexity of data loading comes from handling diverse data formats while meeting the requirements of each target. Different databases may demand distinct data structures or data models, so loading mechanisms must accommodate these differences while preserving data integrity.
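To make the format-handling point concrete, here is a minimal sketch that maps records arriving as CSV and as JSON onto one target schema and loads them into a relational table inside a single transaction. It uses Python’s built-in `sqlite3` module as a stand-in for the destination database; the `orders` table, its columns, and the sample payloads are hypothetical.

```python
import csv
import io
import json
import sqlite3

# Hypothetical source payloads in two different formats with different field names.
CSV_SOURCE = "order_id,amount,currency\n1,19.99,usd\n2,5.00,eur\n"
JSON_SOURCE = '[{"id": 3, "total": "42.50", "ccy": "USD"}]'


def from_csv(text: str) -> list[tuple[int, float, str]]:
    """Map CSV rows onto the target schema (order_id, amount, currency)."""
    reader = csv.DictReader(io.StringIO(text))
    return [(int(r["order_id"]), float(r["amount"]), r["currency"].upper()) for r in reader]


def from_json(text: str) -> list[tuple[int, float, str]]:
    """Map JSON objects with different field names onto the same schema."""
    return [(int(o["id"]), float(o["total"]), o["ccy"].upper()) for o in json.loads(text)]


def load(conn: sqlite3.Connection, rows: list[tuple[int, float, str]]) -> None:
    """Insert all rows atomically: either every row is loaded or none is."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.executemany(
            "INSERT INTO orders (order_id, amount, currency) VALUES (?, ?, ?)", rows
        )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL NOT NULL, currency TEXT NOT NULL)"
)
load(conn, from_csv(CSV_SOURCE) + from_json(JSON_SOURCE))
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())
```

Each source gets its own small mapping function, so supporting a new format means adding one function rather than changing the loader.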

Timeliness

Timeliness matters for data-driven businesses, so many need real-time or near-real-time data loading. Loading must be both fast and accurate so that the latest insights and information are available for immediate decision-making.

Storage and Retention

Data loading also involves managing the storage and retention of historical data. Over time, data volumes can become substantial, so efficient loading practices include purging and archiving old data to keep performance high and storage costs under control.
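One way to implement purging and archiving is sketched below with `sqlite3`, under assumed table names (`events` and `events_archive`) and a hypothetical 90-day retention window. Rows older than the cutoff are copied to the archive table and then deleted from the active table in a single transaction, so a failure midway cannot lose or duplicate rows.

```python
import sqlite3
from datetime import date, timedelta


def archive_and_purge(conn: sqlite3.Connection, today: date, retention_days: int = 90) -> int:
    """Move rows older than the retention window into the archive table.

    Returns the number of rows moved. Dates are stored as ISO-8601 strings,
    so string comparison matches chronological order.
    """
    cutoff = (today - timedelta(days=retention_days)).isoformat()
    with conn:  # the copy and the delete succeed or fail together
        conn.execute(
            "INSERT INTO events_archive SELECT * FROM events WHERE event_date < ?", (cutoff,)
        )
        cur = conn.execute("DELETE FROM events WHERE event_date < ?", (cutoff,))
    return cur.rowcount


conn = sqlite3.connect(":memory:")
for table in ("events", "events_archive"):
    conn.execute(f"CREATE TABLE {table} (id INTEGER, event_date TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [(1, "2024-01-05"), (2, "2024-05-20"), (3, "2024-06-01")],
)
moved = archive_and_purge(conn, today=date(2024, 6, 30))
print(moved)
```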

Serving Business Users

Data loading is the bridge between data processing and the business. Once loaded into databases and applications, the data becomes the foundation for reports, dashboards, and interactive visualizations that let users explore it and derive actionable insights.

Data loading is not a one-time affair; it is an ongoing process. As the data pipeline evolves and new data is generated, loading must keep pace so that the latest information is integrated into the organization’s data ecosystem.
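Because loading is ongoing, pipelines often load incrementally instead of reloading everything. A common pattern, sketched here under assumptions, tracks a high-water mark (the newest `updated_at` value already loaded) and upserts only newer source rows. The `customers` table, the `updated_at` column, and the `incremental_load` helper are hypothetical; `INSERT OR REPLACE` is SQLite syntax, and other databases provide their own upsert statements.

```python
import sqlite3


def incremental_load(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Copy source rows newer than the target's high-water mark; return the count."""
    # The high-water mark is the newest timestamp already present in the target.
    (watermark,) = target.execute(
        "SELECT COALESCE(MAX(updated_at), '') FROM customers"
    ).fetchone()
    new_rows = source.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    with target:
        # Upsert: a changed row replaces the previously loaded version of that id.
        target.executemany(
            "INSERT OR REPLACE INTO customers (id, name, updated_at) VALUES (?, ?, ?)",
            new_rows,
        )
    return len(new_rows)


schema = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
source.execute(schema)
target.execute(schema)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-06-01T10:00"), (2, "Grace", "2024-06-01T11:00")],
)
print(incremental_load(source, target))  # first run loads both rows
source.execute(
    "UPDATE customers SET name = 'Ada L.', updated_at = '2024-06-02T09:00' WHERE id = 1"
)
print(incremental_load(source, target))  # second run loads only the changed row
```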

Data loading completes the data pipeline by placing refined, processed data in the databases and applications that need it. Because it handles diverse formats, supports real-time delivery, and adapts to evolving data needs, data loading is the gateway to actionable insights that help businesses make informed decisions.

Data Pipeline Orchestration and Automation

Orchestration platforms coordinate the activities of the different components in a data pipeline. They hand work from one stage to the next so that data moves through the pipeline in the correct order. Workflow management tools direct the execution of tasks, track the dependencies between them, and manage time-critical operations.

Data Automation

Automation relieves data engineers of manual intervention and repetitive tasks. Automated scripts and workflows execute tasks consistently and precisely, reducing the risk of human error.

Dynamic Nature of Pipelines

Orchestration and automation also suit the dynamic nature of data pipelines. As new data sources emerge or business needs evolve, orchestration platforms can be reconfigured to accommodate the changes while the rest of the pipeline keeps running smoothly.

Parallelism

Parallelism improves the data pipeline’s performance. Orchestration platforms distribute independent tasks across multiple nodes so they run simultaneously, which can significantly reduce total processing time.

Data Dependencies

Orchestration platforms also handle data dependencies. A downstream task executes only when the data it requires is available, so each stage waits for its predecessors to complete before proceeding.
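The dependency and parallelism behavior described above can be sketched with Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+). Tasks whose upstream dependencies have finished become ready together and run in parallel, while downstream tasks wait. The task names and the `run_task` function are hypothetical placeholders. A thread pool is used on the assumption that tasks are I/O-bound (queries, file transfers); real orchestrators such as Apache Airflow add scheduling, retries, and distribution across machines on top of this idea.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "load_warehouse": {"join"},
}


def run_task(name: str) -> str:
    """Placeholder for real work (a query, a transformation, a file copy)."""
    return name


def run_pipeline(graph: dict[str, set[str]], max_workers: int = 4) -> list[str]:
    """Run each task as soon as its dependencies finish; return completion order."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a loop
    completed: list[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        running = {}
        while sorter.is_active():
            # Submit every task whose dependencies are all done.
            for task in sorter.get_ready():
                running[pool.submit(run_task, task)] = task
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                task = running.pop(future)
                future.result()  # re-raise any exception from the task
                sorter.done(task)  # unblocks downstream tasks
                completed.append(task)
    return completed


print(run_pipeline(PIPELINE))
```

The two extract tasks have no dependencies, so they start together; `join` starts only after both finish, and `load_warehouse` only after `join`.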

Error Handling

Through monitoring and error handling, orchestration platforms detect performance bottlenecks and errors in real time, allowing problems to be fixed before they degrade the pipeline.

Resource Management

Orchestration and automation also extend to resource management. By provisioning computational resources dynamically based on workload requirements, orchestration platforms keep the data pipeline running efficiently.

Cloud Solutions

Cloud-based solutions make pipeline management scalable and cost-effective. Cloud-based orchestration platforms are elastic: they adjust resources dynamically as data volumes fluctuate, and pay-as-you-go pricing avoids paying for idle capacity.

In short, data pipeline orchestration and automation coordinate tasks, automate operations, and adapt to changing demands. Together they keep data processing reliable and efficient and help data-driven organizations unlock the full potential of their data.

The Role of Orchestration Tools

Orchestration tools guide and coordinate the various components of a data pipeline. They keep data flowing through each stage, execute tasks in the right order while respecting their dependencies, and manage data movement between systems.

At their core, orchestration tools coordinate and manage the workflow of data processing tasks. Like a conductor’s baton, they determine the order in which tasks execute and keep data moving from one stage to the next in sync.

Flexibility

Orchestration tools make a data pipeline flexible. As business needs evolve and new data sources emerge, the workflow can be modified to accommodate them, keeping data processing agile and efficient.

Parallelism is one of the key strengths of orchestration tools. By distributing tasks across multiple computing nodes, they execute independent tasks simultaneously, significantly reducing processing time. This improves the overall efficiency of the pipeline and delivers insights and analysis faster.

Dependency

In data processing, dependencies between tasks are inevitable. Orchestration tools make downstream tasks wait until their required data is available before executing, which keeps processing results accurate and complete.

Error Handling

Another vital role of orchestration tools is error handling and monitoring. They continuously monitor the data pipeline’s performance and detect anomalies or errors in real time. With proactive error handling, they address issues quickly to keep the pipeline stable and reliable.

Scalability

Cloud-based orchestration tools offer scalability and cost-effectiveness. They optimize resource allocation by provisioning computing resources dynamically based on workload demands, scaling up or down as data volumes fluctuate. The pay-as-you-go model minimizes unnecessary costs, which makes cloud-based orchestration a popular choice for many organizations.

Orchestration tools also integrate diverse data sources and destinations. They can connect to various databases, data lakes, and cloud storage services and move data between these platforms, extending what the pipeline can do.

In summary, orchestration tools coordinate the data processing tasks in a pipeline. Through workflow management, parallel processing, dependency handling, error monitoring, and data integration, they improve the pipeline’s efficiency and reliability and help businesses make data-driven decisions in an ever-evolving analytics landscape.

Advantages of Data Pipeline Automation

Data pipeline automation brings many advantages that improve the overall performance of data pipelines and benefit the organization as a whole.

Improved Efficiency

Automation eliminates the need for manual intervention in repetitive data processing tasks. It performs these tasks with consistent accuracy and speed, reducing the time and effort data processing requires.

Reduced Errors

Automation minimizes the human errors that can occur during manual data processing. By following predefined rules and processes, it preserves data integrity and reliability.

Time Savings

Automation speeds up data processing by streamlining the workflow and running tasks in parallel. These time savings let businesses gain insights and make decisions faster, giving them a competitive edge.

Scalability

As data volumes fluctuate, automation adjusts resource allocation dynamically to keep the pipeline scalable. This elasticity accommodates data growth and changing processing demands without manual intervention.

Consistency

Automation processes data the same way on every run and across all instances. This consistency produces reliable, comparable results and improves data quality.

Real-Time Data Processing

Automation makes real-time data processing practical, enabling businesses to respond swiftly to emerging trends and events and to make agile decisions.

Adaptability

Data pipeline automation is flexible: it can accommodate changes in data sources, processing requirements, and business needs. This adaptability keeps the pipeline agile and responsive to evolving conditions.

Cost-Effectiveness

By optimizing resource utilization and reducing the need for manual labor, automation lowers operational costs associated with data processing.

Centralized Management

Automation platforms offer centralized management and a holistic view of the data pipeline. This centralized control streamlines monitoring, error handling, and maintenance.

Elevated Data Quality

By applying predefined rules and processing data consistently, data pipeline automation enhances data quality, making the insights derived from the data more reliable and accurate.

Enhanced Data Governance

Automation applies predefined data governance policies consistently, promoting compliance and data security throughout the data processing journey.

Continuous Availability

Automated data pipelines can run continuously, keeping data processing available around the clock. This supports global operations and real-time decision-making.

In summary, data pipeline automation improves efficiency, reduces errors, saves time, scales with demand, and raises data quality. Its adaptability, cost-effectiveness, and centralized management, along with support for real-time processing and stronger data governance, help businesses increase productivity and gain data-driven insight in the modern data landscape.

Choosing the Right Automation Framework

Selecting the right automation framework for data pipelines is crucial. It requires careful consideration of several factors to ensure that the framework fits the organization’s unique needs and handles its data processing well. Let’s explore the key aspects to consider when selecting an automation framework.

Compatibility and Integration

The chosen framework must seamlessly integrate with the existing data infrastructure, tools, and technologies used within the organization. Compatibility ensures a smooth data flow and reduces the effort required for integration.

Scalability

As the data pipeline expands and the volume of data grows, the automation framework should be scalable to handle the increased load without compromising performance or data accuracy.

Flexibility and Adaptability

Data environments are constantly evolving. The automation framework should be flexible enough to accommodate changes in data sources, processing requirements, and business workflows. It should adapt to new technologies and industry standards as they emerge.

Ease of Use

The automation framework should be user-friendly and accessible to technical and non-technical users. A well-designed interface and clear documentation simplify the onboarding process and reduce the learning curve.

Error Handling and Monitoring

An effective automation framework should have robust error-handling and monitoring capabilities. It should detect and address errors promptly and provide real-time alerts and insight into the data pipeline’s health.

Community Support and Updates

Consider choosing a framework with an active community of users and developers. Regular updates and support from the community ensure that the framework remains up-to-date, secure, and reliable.

Security and Data Governance

Data security is paramount in any data pipeline. The automation framework should offer robust security features and support the organization’s data governance policies, ensuring data confidentiality and compliance.

Performance and Execution Time

Evaluate the framework’s performance and execution time for different data processing tasks. A high-performing framework can significantly impact the efficiency of the data pipeline.

Cost-Effectiveness

Assess the cost of implementing and maintaining the automation framework. Consider upfront and long-term expenses, including licensing fees, resource requirements, and ongoing support.

Cloud Compatibility

If your organization operates in a cloud environment, consider an automation framework that seamlessly integrates with popular cloud services. Cloud compatibility offers scalability, cost-effectiveness, and elastic resource allocation.

Support for Diverse Data Sources

Ensure that the automation framework supports a wide range of data sources and formats, since data in modern organizations is often generated by many different systems and applications.

Future-proofing

Anticipate the future needs of your data pipeline. Select a framework that not only meets current requirements but can also accommodate future advancements and challenges.

Choosing the right automation framework for data pipelines requires a thoughtful evaluation of compatibility, scalability, flexibility, ease of use, error handling, community support, security, performance, cost-effectiveness, cloud compatibility, data source support, and future-proofing. With this comprehensive assessment, organizations can confidently select a framework that meets their data processing needs and supports a successful data-driven future.

Challenges in Data Pipeline Design and Management

Designing and managing data pipelines means coordinating many components and stages to keep data flowing smoothly. Along the way, data engineers and organizations face challenges they must overcome. Let’s explore the key challenges in data pipeline design and management.

Data Complexity and Diversity

In today’s data landscape, data comes in various formats, structures, and sources. Managing the complexity and diversity of data can be challenging since different data types may require specific processing techniques and integration methods.

Data Quality and Integrity

Ensuring data quality and integrity throughout the pipeline is critical. Errors and inconsistencies in data can have far-reaching consequences for downstream analysis and decision-making. Maintaining data quality requires robust validation and cleansing mechanisms.

Data Governance and Compliance

Because data pipelines handle sensitive information, data governance and compliance become significant challenges. Adhering to data privacy regulations and ensuring data security throughout the pipeline are essential to avoid legal and reputational risks.

Scalability and Performance

As data volumes grow, scalability becomes a concern. Designing pipelines that can handle increasing data loads while maintaining performance requires careful consideration of resource allocation and data partitioning strategies.

Real-Time Data Processing

Organizations increasingly demand real-time data insights. Designing data pipelines that can process and deliver real-time data while managing the associated latency and throughput challenges can be complex.

Dependency Management

Data pipelines often consist of interdependent tasks. Managing data dependencies and ensuring that downstream tasks wait for required data to be available can be intricate, especially in distributed computing environments.

Error Handling and Monitoring

Detecting and handling errors in data pipelines is crucial to maintain data integrity and pipeline reliability. Implementing effective error handling and monitoring mechanisms, especially in complex workflows, can be challenging.

Cost Optimization

Data pipeline design involves balancing cost against performance. Optimizing resource usage to minimize operational costs while still meeting processing requirements can be a delicate task.

Versioning and Deployment

Managing multiple versions of data pipelines, deploying changes smoothly, and handling backward compatibility are essential to enable iterative development and maintain a stable production environment.

Integration with Existing Systems

Integrating data pipelines with existing IT infrastructure and tools can be challenging. Ensuring compatibility and smooth data exchange between systems is crucial for efficient data processing.

Documentation and Collaboration

Proper documentation and collaboration are vital for successful data pipeline management. Well-documented design, configuration, and changes help with troubleshooting and knowledge sharing among team members.

Data Lineage and Traceability

Maintaining data lineage and traceability is critical for data governance and compliance. Tracking the origin and movement of data across the pipeline can be complex, especially in distributed and multi-stage architectures.

Data pipeline design and management present various challenges, from handling data complexity and ensuring data quality to addressing scalability, real-time processing, and compliance requirements. Overcoming them requires thoughtful planning, advanced technologies, and continuous monitoring and optimization. By facing these challenges head-on and finding innovative solutions, organizations can build data pipelines that deliver valuable insights and support informed decision-making.

Dealing with Data Variety, Volume, and Velocity

Dealing with the three V’s of data – Variety, Volume, and Velocity – is a significant challenge in modern data pipeline design and management. Each V represents a unique aspect of data that organizations must tackle to ensure effective data processing and decision-making.

Data Variety

In today’s data-driven world, data comes in diverse formats, including structured, semi-structured, and unstructured data. The variety extends beyond traditional databases to data from social media, logs, images, videos, and more. Handling it requires data pipelines that can ingest, process, and integrate different data types.

Data Volume

With the exponential growth of data, organizations face the challenge of handling massive data volumes efficiently. Traditional data processing techniques may struggle to cope with the sheer size of data, leading to performance bottlenecks. Scalable data pipelines capable of distributed computing are essential for large-scale data processing.

Data Velocity

Data is generated and updated at an unprecedented pace, often in real time. Organizations need to process and analyze streaming data swiftly to derive timely insights, so data pipelines must handle high data velocity and enable real-time or near-real-time processing.

To address these challenges, organizations can employ several strategies:

Data Integration and Transformation

Implement data integration and transformation processes to unify diverse data sources and formats. Extract, transform, and load (ETL) tools or data integration platforms facilitate this process and help ensure data consistency and quality.

Distributed Computing

Leverage distributed computing frameworks like Apache Hadoop or Apache Spark to parallelize data processing tasks. These frameworks scale horizontally by adding machines and handle large data volumes efficiently.

Real-time Stream Processing

Introduce real-time streaming technologies, such as the Apache Kafka event streaming platform or the Apache Flink stream processing framework, to handle high-velocity data streams. These systems process data as it arrives, allowing real-time analytics and decision-making.

Data Preprocessing and Quality Assurance

Prioritize data preprocessing and quality assurance steps in the data pipeline to ensure data cleanliness and reliability. Addressing data quality issues early in the pipeline helps avoid downstream complications.

Cloud-Based Solutions

Cloud platforms offer elastic scalability and pay-as-you-go models. This makes them ideal for handling varying data volumes and processing demands. Cloud services can dynamically scale resources as data volumes fluctuate.

Data Governance and Metadata Management

Establish robust data governance practices to manage data lineage, traceability, and compliance. Metadata management tools help track data characteristics and facilitate data discovery.

Machine Learning and AI

Implement machine learning and AI algorithms to automate data processing tasks, data classification, and anomaly detection. These technologies can enhance data pipeline efficiency and reduce manual intervention.

Monitoring and Performance Optimization

Implement monitoring and logging mechanisms to track data pipeline performance and identify bottlenecks. Regularly optimize the pipeline for improved efficiency.

By combining these strategies, organizations can effectively tackle the challenges of data variety, volume, and velocity. A well-designed and well-managed data pipeline lets organizations unlock the full potential of their data, supporting data-driven insights and strategic decision-making.

Ensuring Data Quality and Integrity

The accuracy and reliability of data are crucial for making informed decisions and driving meaningful insights. To achieve the desired level of data quality and integrity, data engineers and organizations apply a series of meticulous practices throughout the data pipeline.

Data Validation and Cleansing

A foundational step in ensuring data quality is data validation and cleansing. Data engineers implement validation rules and perform data cleansing to identify and rectify inaccuracies, inconsistencies, and missing values, so that the data adheres to predefined standards and rules.
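A minimal sketch of rule-based validation and cleansing, assuming customer records arrive as Python dictionaries. Cleansing trims whitespace and turns empty strings into missing values; each validation rule either passes or contributes an error message, so invalid records can be quarantined for review instead of being silently loaded. The field names and rules are hypothetical examples.

```python
import re

# Deliberately simple email check for illustration, not full RFC 5322 validation.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def cleanse(record: dict) -> dict:
    """Trim string fields and turn empty strings into None (missing)."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip() or None
        cleaned[key] = value
    return cleaned


def validate(record: dict) -> list[str]:
    """Return a list of rule violations; an empty list means the record is valid."""
    errors = []
    if record.get("customer_id") is None:
        errors.append("customer_id is missing")
    email = record.get("email")
    if email is not None and not EMAIL_PATTERN.match(email):
        errors.append(f"invalid email: {email!r}")
    age = record.get("age")
    if age is not None and not (0 <= age <= 130):
        errors.append(f"age out of range: {age}")
    return errors


def split_valid_invalid(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Cleanse every record, then route it to the valid list or to quarantine."""
    valid, quarantined = [], []
    for raw in records:
        record = cleanse(raw)
        problems = validate(record)
        if problems:
            quarantined.append((record, problems))
        else:
            valid.append(record)
    return valid, quarantined


rows = [
    {"customer_id": 1, "email": " ada@example.com ", "age": 36},
    {"customer_id": None, "email": "not-an-email", "age": 200},
]
valid, quarantined = split_valid_invalid(rows)
print(len(valid), len(quarantined))
```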

Standardization and Normalization

Diverse data sources often introduce variations in data formats and representations, such as dates written in different formats. Standardizing and normalizing data produces consistent data structures, which makes it easier to integrate and compare different data sets.
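As an illustration of standardization, the sketch below converts dates written in several formats into ISO 8601 (YYYY-MM-DD) and maps country spellings onto two-letter codes. The accepted input formats and the `COUNTRY_ALIASES` mapping are assumptions for the example; a real pipeline would derive them from its actual sources.

```python
from datetime import datetime

# Input date formats assumed to appear in the hypothetical sources.
# Slash dates are assumed to be day/month/year; US-style month/day would conflict.
# "%b" expects English month abbreviations (the default C locale).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y")

# Hypothetical mapping of free-text country names onto ISO 3166-1 alpha-2 codes.
COUNTRY_ALIASES = {
    "usa": "US",
    "united states": "US",
    "us": "US",
    "germany": "DE",
    "deutschland": "DE",
}


def standardize_date(text: str) -> str:
    """Parse a date in any accepted format and return it as YYYY-MM-DD."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {text!r}")


def standardize_country(text: str) -> str:
    """Map a country spelling to its two-letter code, case-insensitively."""
    key = text.strip().lower()
    if key not in COUNTRY_ALIASES:
        raise ValueError(f"unknown country: {text!r}")
    return COUNTRY_ALIASES[key]


print(standardize_date("03/07/2024"), standardize_date("Jul 3, 2024"), standardize_country(" USA "))
```

Raising an error for unrecognized values, rather than guessing, lets the validation step route those records to quarantine.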

Duplicate Detection and Deduplication

Duplicates in data can lead to misleading results and skewed insights. By applying duplicate detection and deduplication techniques, engineers eliminate redundant records so that each entity is represented once.
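A minimal deduplication sketch, assuming each record carries an email address that identifies the customer and an ISO-8601 `updated_at` timestamp. Records are grouped by a normalized key (the trimmed, lower-cased email), and only the most recently updated record per key is kept. Real-world matching often needs fuzzier keys; the field names here are hypothetical.

```python
def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the newest record for each normalized email address."""
    latest: dict[str, dict] = {}
    for record in records:
        key = record["email"].strip().lower()  # normalize before comparing
        current = latest.get(key)
        # ISO-8601 timestamps compare correctly as strings.
        if current is None or record["updated_at"] > current["updated_at"]:
            latest[key] = record
    return list(latest.values())


customers = [
    {"email": "Ada@Example.com", "name": "Ada", "updated_at": "2024-01-01"},
    {"email": "ada@example.com ", "name": "Ada Lovelace", "updated_at": "2024-03-01"},
    {"email": "grace@example.com", "name": "Grace", "updated_at": "2024-02-01"},
]
print([c["name"] for c in deduplicate(customers)])  # ['Ada Lovelace', 'Grace']
```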

Data Profiling and Metadata Management

Data profiling analyzes the raw data to understand its characteristics, such as value distributions, patterns, and uniqueness. Metadata management captures essential information about data sources, transformations, and lineage, which facilitates data discovery and traceability.
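A small profiling sketch using only the standard library: for each column it reports the share of missing values and the number of distinct values, plus minimum, maximum, and mean for columns whose values are all numeric. The `profile` function and the sample rows are hypothetical; dedicated profiling tools compute many more statistics.

```python
from statistics import mean


def profile(rows: list[dict]) -> dict[str, dict]:
    """Compute simple per-column statistics over a list of dict rows."""
    columns = {key for row in rows for key in row}
    report = {}
    for column in sorted(columns):
        values = [row.get(column) for row in rows]
        present = [v for v in values if v is not None]
        stats = {
            "null_ratio": 1 - len(present) / len(values),
            "distinct": len(set(present)),
        }
        # Treat the column as numeric only if every present value is a number.
        numeric = [v for v in present if isinstance(v, (int, float)) and not isinstance(v, bool)]
        if numeric and len(numeric) == len(present):
            stats.update(min=min(numeric), max=max(numeric), mean=mean(numeric))
        report[column] = stats
    return report


sample = [
    {"id": 1, "country": "US", "amount": 10.0},
    {"id": 2, "country": None, "amount": 30.0},
    {"id": 3, "country": "US", "amount": None},
]
for column, stats in profile(sample).items():
    print(column, stats)
```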

Data Governance Policies

Implementing robust data governance policies ensures that data adheres to predefined guidelines and standards. Data governance frameworks establish controls and security measures that safeguard data integrity and promote compliance with regulations.

Error Handling and Monitoring

Data pipelines must have error-handling mechanisms to detect, log, and address errors during data processing. Real-time monitoring lets data engineers intervene promptly, upholding data quality throughout the pipeline.

Quality Metrics and Reporting

Defining data quality metrics enables organizations to measure and track data quality over time. Regular quality reports show how effective data quality measures are and guide continuous improvement efforts.

User Feedback and Validation

Data users play a pivotal role in ensuring data quality. Collecting user feedback and validating data against real-world scenarios help identify discrepancies and areas for improvement, which in turn raises user satisfaction.

Data Integration and Transformation

Data integration and transformation processes significantly affect data quality. Well-designed integration and transformation workflows move data smoothly across the pipeline and reduce the likelihood of data degradation.

Automated Testing and Validation

Automation plays a central role in data quality assurance. Automated testing and validation processes continuously check data integrity, enabling rapid detection and correction of data anomalies.

Ensuring data quality and integrity requires systematic practices, from data validation and cleansing to error handling, data governance, and user validation. By adopting these practices and continuously refining their data quality measures, organizations produce reliable and accurate data that supports informed decision-making and strategic excellence.

Handling Errors and Failures in the Pipeline

Handling errors and failures keeps data flowing through the pipeline despite unforeseen challenges. In the dynamic world of data processing, errors and failures are inevitable, so data engineers need a set of strategies to manage these disruptions and recover from them gracefully. Let’s explore the key approaches to handling errors and failures in the data pipeline.

Graceful Error Handling

Graceful error handling prevents individual errors from interrupting data processing and keeps the data pipeline running. When errors occur, error-handling routines capture relevant information about the error, log it for analysis, and initiate appropriate recovery actions.

Monitoring and Alerting

Data engineers set up monitoring and alerting systems to continuously observe the health and performance of the data pipeline. Real-time monitoring enables rapid detection of anomalies and deviations from expected behavior, and alerts promptly notify data engineers of critical issues so they can take immediate action.

Automated Retry Mechanisms

Data engineers incorporate automated retry mechanisms in the data pipeline to address transient errors, such as a brief network outage. When a task encounters a transient error, the pipeline retries the operation after a short delay, which reduces the likelihood of failure due to temporary issues.

Backoff Strategies

Backoff strategies help when failures repeat. Instead of retrying a failed task immediately, the pipeline waits progressively longer between retries (for example, doubling the delay each time) to avoid overwhelming the system and potentially causing cascading failures.
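Retries and backoff are usually combined. The sketch below retries a callable on exceptions treated as transient, doubling the delay after each failure up to a cap and adding random "full jitter" so that many clients do not retry in lockstep. Which exceptions count as transient (the hypothetical `TransientError` here), the attempt limit, and the delay values are all assumptions; the `sleep` parameter exists only so tests can skip real waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return operation()
        except TransientError:
            if attempt >= max_attempts:
                raise  # give up and surface the error to the orchestrator
            # Exponential backoff: 0.5s, 1s, 2s, ... capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter: wait a random time between 0 and the computed delay.
            sleep(random.uniform(0, delay))
            attempt += 1


calls = {"count": 0}


def flaky_fetch() -> str:
    """Hypothetical source call that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("temporary network glitch")
    return "payload"


print(retry_with_backoff(flaky_fetch, sleep=lambda seconds: None))
```

Only errors known to be transient are retried; anything else propagates immediately, because retrying a permanent failure (such as bad credentials) only delays the alert.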

Fault Tolerance and Redundancy

Following fault-tolerant design principles, data engineers build redundancy into critical components of the data pipeline. If a component fails, the pipeline can switch to an alternative resource, mitigating the impact of the failure.

Failover Mechanisms

Data engineers establish failover mechanisms to address failures in distributed environments. Failover lets the data pipeline switch to a backup system or node if the primary one becomes unavailable, keeping data processing uninterrupted.
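A simplified, client-side failover sketch: the reader tries an ordered list of endpoints and moves on to the next one when a connection fails. The endpoint names and the `fake_fetch` function are hypothetical stand-ins. In production, failover usually relies on health checks and is often handled by the database, load balancer, or cluster manager rather than by application code.

```python
from typing import Callable, Sequence


class EndpointUnavailable(Exception):
    """Raised by a fetch function when an endpoint cannot be reached."""


def fetch_with_failover(
    endpoints: Sequence[str], fetch: Callable[[str], bytes]
) -> tuple[str, bytes]:
    """Return (endpoint, data) from the first endpoint that responds."""
    failures = []
    for endpoint in endpoints:  # primary first, then backups in priority order
        try:
            return endpoint, fetch(endpoint)
        except EndpointUnavailable as exc:
            failures.append(f"{endpoint}: {exc}")
    raise EndpointUnavailable("all endpoints failed; " + "; ".join(failures))


def fake_fetch(endpoint: str) -> bytes:
    """Hypothetical fetch in which the primary node is down."""
    if endpoint == "db-primary":
        raise EndpointUnavailable("connection refused")
    return b"rows from " + endpoint.encode()


print(fetch_with_failover(["db-primary", "db-replica-1"], fake_fetch))
```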

Rollback and Compensation

When a failure occurs during data processing, data engineers rely on rollback and compensation mechanisms. Rollback undoes the partial changes made before the failure to keep data consistent, while compensating actions correct inconsistencies that cannot simply be rolled back, such as changes already committed in another system.
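Rollback is simplest when a unit of work runs inside a single database transaction. In this `sqlite3` sketch, a hypothetical transfer between two account balances fails midway because of a `CHECK` constraint. Since both updates share one transaction, the first update is rolled back and the data stays consistent. Compensation, which is needed when steps span systems that cannot share a transaction, is not shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE balances (account TEXT PRIMARY KEY, amount INTEGER NOT NULL CHECK (amount >= 0))"
)
conn.executemany("INSERT INTO balances VALUES (?, ?)", [("a", 100), ("b", 0)])
conn.commit()


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move `amount` between accounts atomically; return False if rolled back."""
    try:
        with conn:  # commit if the block succeeds, roll back if it raises
            conn.execute(
                "UPDATE balances SET amount = amount + ? WHERE account = ?", (amount, dst)
            )
            # Violates the CHECK constraint if src would go negative.
            conn.execute(
                "UPDATE balances SET amount = amount - ? WHERE account = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        return False


print(transfer(conn, "a", "b", 150))  # False: "a" only holds 100
print(dict(conn.execute("SELECT account, amount FROM balances")))  # {'a': 100, 'b': 0}
```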

Data Reprocessing and Recovery

Data engineers design the data pipeline to support data reprocessing and recovery. By maintaining checkpoints or snapshots of data at different stages, they can reprocess data from a specific point after a failure and bring the pipeline back on track.
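A minimal checkpointing sketch, assuming input arrives in numbered batches and progress is recorded in a small JSON file after each batch. The file is written to a temporary path and then moved into place with `os.replace`, so a crash cannot leave a half-written checkpoint. After a failure, rerunning the job resumes from the batch after the last checkpoint instead of starting over. The checkpoint path, batch structure, and processing callable are hypothetical.

```python
import json
import os
import tempfile
from typing import Callable, Sequence


def read_checkpoint(path: str) -> int:
    """Return the index of the last completed batch, or -1 if none."""
    if not os.path.exists(path):
        return -1
    with open(path) as f:
        return json.load(f)["last_completed_batch"]


def write_checkpoint(path: str, batch_index: int) -> None:
    """Atomically record progress: write a temp file, then rename over the old one."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump({"last_completed_batch": batch_index}, f)
    os.replace(tmp_path, path)


def run_from_checkpoint(
    batches: Sequence[list], process_batch: Callable[[list], None], path: str
) -> None:
    """Process batches in order, skipping those already completed."""
    start = read_checkpoint(path) + 1
    for index in range(start, len(batches)):
        process_batch(batches[index])
        write_checkpoint(path, index)  # recorded only after the batch succeeds


processed = []
attempts = {"n": 0}


def flaky(batch: list) -> None:
    """Hypothetical processing step that crashes once, on its second call."""
    attempts["n"] += 1
    if attempts["n"] == 2:
        raise RuntimeError("worker crashed")
    processed.append(batch)


with tempfile.TemporaryDirectory() as tmp:
    checkpoint_path = os.path.join(tmp, "checkpoint.json")
    data = [[1, 2], [3, 4], [5, 6]]
    try:
        run_from_checkpoint(data, flaky, checkpoint_path)
    except RuntimeError:
        pass  # simulated crash after batch 0 completed
    run_from_checkpoint(data, flaky, checkpoint_path)  # resumes at batch 1
    print(processed)
```

A crash between processing a batch and writing its checkpoint causes that batch to run again on restart, so the processing step should be idempotent.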

Failure Analysis and Root Cause Identification

Data engineers conduct thorough failure analysis when errors occur to identify the root causes. Understanding the underlying issues helps in implementing preventive measures to avoid similar failures in the future.

Testing and Simulation

Data engineers conduct rigorous testing and simulation exercises to assess the pipeline’s resilience against various failure scenarios. These tests allow them to identify weaknesses and optimize the pipeline for improved fault tolerance.

Handling errors and failures in the data pipeline requires graceful error handling and real-time monitoring, automated retries with backoff, fault tolerance through redundancy and failover, rollback and compensation, data reprocessing, failure analysis, and testing. By combining these strategies, data engineers make the pipeline reliable and resilient, so it keeps delivering data-driven insights despite the complexities of data processing.

Best Practices for Building Effective Data Pipelines

Building effective data pipelines requires following a set of best practices that keep data flowing smoothly and produce reliable insights. Let’s explore the best practices for building effective data pipelines.

Define Clear Objectives

Start by defining clear objectives and requirements for the data pipeline. Understand the specific data processing needs and desired outcomes to ensure the pipeline aligns with business goals.

Data Quality and Validation

Prioritize data quality and validation to maintain the integrity of the data. Implement data validation rules, cleansing, and transformation processes to ensure consistent and accurate data flow.

Modular and Scalable Design

Design the data pipeline to be modular and scalable. Break it down into reusable components that can be modified and extended easily as data needs evolve.

Automate Data Ingestion

Automate data ingestion from various sources to streamline the data flow. Use tools and technologies that efficiently handle diverse data formats and streaming data sources.

Use Distributed Computing

Embrace distributed computing frameworks to parallelize data processing tasks. Distributed computing handles large data volumes and real-time data streams efficiently.

Data Security and Privacy

Prioritize data security and privacy throughout the pipeline. Implement encryption, access controls, and data anonymization techniques to protect sensitive information.
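As one example of anonymization, the sketch below pseudonymizes an identifier with HMAC-SHA256 from Python’s standard library. The same input always maps to the same token, so joins across data sets still work, but the original value cannot be recovered without the secret key. The hard-coded key is a placeholder; in practice it would come from a secrets manager. Pseudonymized data may still count as personal data under regulations such as the GDPR.

```python
import hashlib
import hmac

# Placeholder only: load the real key from a secrets manager, never hard-code it.
SECRET_KEY = b"replace-with-a-key-from-your-secrets-manager"


def pseudonymize(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable, keyed token for `value` (hex-encoded HMAC-SHA256)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


record = {"email": "Ada@Example.com", "amount": 42}
safe_record = {**record, "email": pseudonymize(record["email"])}
print(safe_record)
```

A keyed HMAC is used instead of a plain hash because common identifiers such as email addresses can be recovered from an unkeyed hash by hashing candidate values and comparing.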

Monitoring and Alerting

Set up monitoring and alerting systems to track pipeline performance and detect anomalies. Timely alerts enable quick intervention and prevent potential disruptions.

Error Handling and Recovery

Plan for graceful error handling and recovery mechanisms. Implement retry strategies, backoff mechanisms, and failover processes to address failures and maintain data continuity.
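
A common retry strategy is exponential backoff with jitter. The following is a minimal Python sketch; `TransientError` is a hypothetical exception type standing in for whatever errors your sources raise on timeouts or throttling, and the delay parameters are illustrative defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, throttling)."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run operation, retrying TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error so failover or alerting can take over
            # The delay ceiling doubles per attempt (base, 2x, 4x, ...) up to max_delay;
            # sleeping a random fraction of it ("full jitter") spreads out retry storms.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

Non-transient errors (for example, a schema mismatch) propagate immediately, since retrying them would only delay the failure. The injectable `sleep` parameter makes the function easy to test without real waiting.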

Documentation and Version Control

Maintain comprehensive documentation for the data pipeline, including design decisions, configurations, and dependencies. Use version control to track changes and enable collaboration among team members.

Data Lineage and Auditing

Establish data lineage and auditing mechanisms to track data flow and ensure regulatory compliance. Data lineage helps trace the origin and movement of data across the pipeline.

Testing and Validation

Conduct thorough testing and validation of the data pipeline. Perform unit tests, integration tests, and end-to-end validation to ensure the pipeline’s reliability and accuracy.

Continuous Integration and Deployment

Implement continuous integration and deployment (CI/CD) practices to streamline pipeline updates and ensure consistent and efficient development.

Optimization and Performance Tuning

Continuously optimize the data pipeline for better performance and resource utilization. Monitor processing times, bottlenecks, and resource usage, making necessary adjustments to enhance efficiency.

Backup and Disaster Recovery

Implement backup and disaster recovery strategies to safeguard against data loss or pipeline failures. Back up data regularly and build in redundancy to ensure business continuity.

Data Governance and Compliance

Adhere to data governance principles and compliance requirements. Ensure that the pipeline follows the relevant data regulations and organizational policies.

In all, building effective data pipelines demands a symphony of best practices. Data engineers compose efficient and reliable pipelines by defining clear objectives, prioritizing data quality, designing modular components, automating data ingestion, embracing distributed computing, and ensuring data security. Monitoring, error handling, documentation, testing, and optimization further contribute to the pipeline’s success, supporting data-driven insights and strategic decision-making.

Data Governance and Security

Data governance and security form the heart of a well-orchestrated symphony in the world of data management. Like a conductor guiding each section of the orchestra, data governance defines the rules and standards that harmonize data practices across the organization, while data security acts as a vigilant guardian, protecting data from potential threats. Let’s delve into the significance of data governance and security in orchestrating a successful data-driven performance:

Data Governance:

Data Policies and Standards: Data governance establishes a harmonious composition of policies and standards that govern how data is collected, stored, processed, and used. These guidelines ensure data consistency and uniformity, promoting a seamless data flow across the organization.

Data Ownership and Stewardship: Just like a conductor assigning roles to musicians, data governance defines data ownership and stewardship responsibilities. This clarity ensures that data is managed by accountable stakeholders, leading to a harmonious symphony of data governance.

Data Access and Permissions: Data governance orchestrates the allocation of access and permissions, ensuring that only authorized personnel can access specific data. This practice safeguards against unauthorized data manipulation and protects sensitive information.
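
A simple way to picture this allocation is role-based access control. This hypothetical Python sketch hard-codes a role-to-permission mapping and denies anything not explicitly granted; the roles, dataset names, and permission strings are assumptions, and a real deployment would load them from an identity provider or policy store.

```python
# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"sales:read"},
    "data_engineer": {"sales:read", "sales:write"},
    "admin": {"sales:read", "sales:write", "pii:read"},
}


class AccessDenied(Exception):
    pass


def check_access(roles: set[str], permission: str) -> None:
    """Raise AccessDenied unless at least one role grants the permission (deny by default)."""
    granted = set().union(*(ROLE_PERMISSIONS.get(role, set()) for role in roles))
    if permission not in granted:
        raise AccessDenied(f"missing permission {permission!r}")


def read_dataset(user_roles: set[str], dataset: str) -> str:
    """Check the read permission for a dataset before touching it."""
    check_access(user_roles, f"{dataset}:read")
    return f"contents of {dataset}"  # placeholder for a real read
```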

Data Quality Management: Data governance establishes data quality management practices, ensuring data conforms to predefined standards. By harmonizing data quality initiatives, data engineers create reliable data that resonates with the symphony of accurate insights.

Data Security:

Encryption and Access Controls: Data security employs encryption to transform data into unreadable ciphertext, ensuring that only authorized users holding the right keys can decipher it. Access controls form a protective fortress around the data, preventing unauthorized access and guarding against potential breaches.

Identity and Authentication: Data security authenticates user identities, like a vigilant gatekeeper, granting access only to verified users. Multi-factor authentication adds layers of security, ensuring a harmonious symphony of data protection.

Data Privacy and Compliance: Data security is pivotal in adhering to data privacy regulations. Harmonizing data practices with privacy laws ensures that data is handled responsibly, respecting individuals’ rights to privacy.

Monitoring and Threat Detection: Data security employs monitoring and threat detection systems. These systems alert data engineers to potential security breaches, enabling timely intervention and remediation.

Integrating Data Governance and Security

Data governance and security are interconnected and interdependent. Following these practices ensures data flows smoothly within a secure and compliant environment.

Collaborative Data Management: Effective data governance encourages collaboration between the data governance and security teams. This collaboration ensures that data policies and security measures align seamlessly, which strengthens data protection.

Risk Management: Data governance and security work to assess and mitigate risks. By identifying potential threats and vulnerabilities, data engineers create risk management strategies that protect data from harm.

Data governance and security are essential in data management. Data governance defines the rules and standards for data practices, while data security protects data from potential threats. Through collaborative data management, risk mitigation, and adherence to data privacy regulations, organizations create a harmonious ensemble of data-driven insights. Together, data governance and security orchestrate a successful data-driven performance that leads to informed decisions and strategic excellence.

Scalability and Performance Optimization

Scalability and performance optimization form a vital section of the data pipeline orchestra. Data engineers apply these practices to keep data processing efficient as workloads grow. Let’s explore the significance of scalability and performance optimization in creating a symphonic data pipeline.

Scalability

Horizontal Scaling:

Data engineers employ horizontal scaling, adding more resources such as servers or nodes to the pipeline. This approach lets the pipeline handle increasing data volumes and growing demands, and keeps the workload manageable even during peak loads.

Distributed Processing:

With the help of distributed frameworks, data engineers divide data processing work into smaller tasks that run in parallel. This approach accelerates data processing and improves performance and efficiency.
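
The split-process-combine pattern can be sketched on a single machine with Python's `concurrent.futures`. Here a thread pool stands in for cluster nodes, which is an assumption for illustration: in CPython, CPU-bound work would need processes or a distributed framework such as Apache Spark or Dask to run truly in parallel. The record fields (`region`, `amount`) are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Iterable


def chunked(items: list, size: int) -> list[list]:
    """Split the input into fixed-size chunks (the last one may be shorter)."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def process_chunk(chunk: list[dict]) -> dict[str, float]:
    """Map step: total the amount per region within one chunk."""
    totals: dict[str, float] = {}
    for row in chunk:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


def merge(partials: Iterable[dict[str, float]]) -> dict[str, float]:
    """Reduce step: combine the per-chunk partial totals."""
    result: dict[str, float] = {}
    for partial in partials:
        for key, value in partial.items():
            result[key] = result.get(key, 0.0) + value
    return result


def parallel_revenue(rows: list[dict], chunk_size: int = 1000, workers: int = 4) -> dict[str, float]:
    """Process chunks concurrently, then merge their results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return merge(pool.map(process_chunk, chunked(rows, chunk_size)))
```

The map step works on each chunk independently and the reduce step only merges small partial results, which is what allows the same logic to be spread across many machines.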

Cloud Infrastructure:

By embracing cloud technology, data engineers gain scalability. Cloud platforms provide flexible, elastic resources that allow data pipelines to adjust dynamically to varying workloads.

Performance Optimization

Indexing and Partitioning:

Data engineers use indexing and partitioning techniques to organize data for quicker access. This performance optimization ensures efficient data retrieval and processing.
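
The idea behind partitioning and indexing can be shown with plain Python data structures. This is only an in-memory analogy of what databases and partitioned storage layouts do; the event fields and dates are made up.

```python
from collections import defaultdict


def partition_by(records: list[dict], key: str) -> dict[str, list[dict]]:
    """Group records into partitions keyed by one column (for example, an event date)."""
    partitions: dict[str, list[dict]] = defaultdict(list)
    for record in records:
        partitions[record[key]].append(record)
    return dict(partitions)


def build_index(records: list[dict], key: str) -> dict:
    """Map a unique key to its record so lookups avoid a full scan."""
    return {record[key]: record for record in records}


events = [
    {"id": 1, "date": "2024-01-01", "value": 10},
    {"id": 2, "date": "2024-01-02", "value": 20},
    {"id": 3, "date": "2024-01-01", "value": 30},
]

by_date = partition_by(events, "date")
# A query for one day touches only that partition instead of every record.
jan_first = by_date.get("2024-01-01", [])
by_id = build_index(events, "id")
```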

Caching:

Data engineers employ caching mechanisms. Caching stores frequently accessed data in memory. It reduces data retrieval time and creates a symphony of faster data processing.
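
For lookups that repeat often and change rarely, Python's `functools.lru_cache` provides a simple in-memory cache. In this sketch `fetch_exchange_rate_from_source` is a hypothetical slow call, and the rates are illustrative values, not real data.

```python
from functools import lru_cache

LOOKUPS = {"count": 0}  # counts how often the slow source is actually hit


def fetch_exchange_rate_from_source(currency: str) -> float:
    """Hypothetical slow lookup, such as an API or database call."""
    LOOKUPS["count"] += 1
    rates = {"EUR": 1.1, "GBP": 1.3}  # illustrative values only
    return rates[currency]


@lru_cache(maxsize=1024)
def exchange_rate(currency: str) -> float:
    """Cached wrapper: repeated currencies are served from memory."""
    return fetch_exchange_rate_from_source(currency)


def to_usd(rows: list[dict]) -> list[float]:
    """Convert each row's amount using the (cached) exchange rate."""
    return [row["amount"] * exchange_rate(row["currency"]) for row in rows]
```

Note that `lru_cache` has no expiry, so values that change over time need a time-to-live policy or an explicit `exchange_rate.cache_clear()`.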

Compression:

Data engineers increase efficiency using data compression. Compressing data reduces storage space and speeds up data transmission, at the cost of some CPU time for compression and decompression. This helps data flow smoothly throughout the pipeline.
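
The storage saving is easy to observe with Python's standard `gzip` and `json` modules. This sketch compresses newline-delimited JSON; the sensor records are synthetic and deliberately repetitive, so real-world compression ratios will differ.

```python
import gzip
import json


def serialize(records: list[dict]) -> bytes:
    """Encode records as newline-delimited JSON."""
    return "\n".join(json.dumps(r) for r in records).encode("utf-8")


def compress_records(records: list[dict]) -> bytes:
    return gzip.compress(serialize(records))


def decompress_records(blob: bytes) -> list[dict]:
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]


# Synthetic, highly repetitive data (repetition is what compresses well).
records = [{"sensor": "s1", "status": "ok", "reading": i % 5} for i in range(1000)]
raw = serialize(records)
blob = compress_records(records)
print(f"{len(raw)} bytes raw -> {len(blob)} bytes gzipped")
```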

Performance Monitoring and Tuning

Real-time Monitoring:

Data engineers set up real-time monitoring of the pipeline’s performance. This monitoring provides timely insights into bottlenecks or anomalies, enabling quick intervention to keep the pipeline in tune.

Profiling and Benchmarking:

Data engineers conduct profiling and benchmarking, analyzing the pipeline’s performance metrics. By understanding the pipeline’s strengths and weaknesses, they fine-tune the components for optimal efficiency.

Resource Optimization:

Data engineers optimize resource allocation by assigning resources to each task according to its needs. This balanced allocation maximizes the pipeline’s performance.

Load Balancing

Load Balancers:

Load balancers serve as conductors, distributing work evenly among multiple servers or nodes. This even distribution prevents overburdening specific resources, which optimizes performance and maintains stability.
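
Two common balancing policies can be sketched in a few lines of Python: round-robin, which rotates through the servers, and least-loaded, which picks the server with the fewest in-flight tasks. The server names are placeholders, and a production balancer would also account for server health.

```python
import itertools


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers: list[str]):
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastLoadedBalancer:
    """Sends each task to the server with the fewest in-flight tasks."""

    def __init__(self, servers: list[str]):
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first minimum, so ties go to the earliest-listed server.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        """Call when a task finishes so the server's load count drops."""
        self.active[server] -= 1
```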

Dynamic Resource Allocation:

Data engineers achieve balance through dynamic resource allocation. Resources are assigned dynamically based on workload demands, allowing the pipeline to adapt to changing performance needs.
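
A dynamic allocation rule can be as simple as sizing the worker pool to the current backlog. The function below is a hypothetical sketch: the processing rate, target drain time, and worker limits are illustrative parameters, not values from any specific autoscaler.

```python
import math


def desired_workers(
    backlog: int,
    per_worker_rate: float,
    target_seconds: float,
    min_workers: int = 1,
    max_workers: int = 20,
) -> int:
    """Estimate how many workers can drain the backlog within target_seconds.

    per_worker_rate: records one worker processes per second (an assumed, measured figure).
    The result is clamped to [min_workers, max_workers] to bound cost and keep a floor.
    """
    if per_worker_rate <= 0 or target_seconds <= 0:
        raise ValueError("rate and target must be positive")
    needed = math.ceil(backlog / (per_worker_rate * target_seconds))
    return max(min_workers, min(max_workers, needed))
```

Re-evaluating this periodically, for example once per monitoring window, lets the pool grow during bursts and shrink when the queue drains.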

Scalability and performance optimization are integral to the work of data pipelines. Through horizontal scaling, distributed processing, cloud infrastructure, indexing, partitioning, caching, compression, monitoring, tuning, load balancing, and dynamic resource allocation, data engineers organize a uniform ensemble of efficient data processing. This approach ensures that data pipelines can handle growing demands, maintain optimal performance, and deliver reliable and timely insights, which resonate with the success of data-driven decisions and strategic achievements.

Monitoring and Alerting for Data Pipelines

Monitoring and alerting serve as vigilant sentinels, keeping a watchful eye and ear on data pipelines. These practices safeguard the pipeline’s health, performance, and stability, enabling timely interventions and harmonious resolutions to potential issues. Let’s explore the significance of monitoring and alerting in a successful data pipeline performance.

Real-time Monitoring

Data engineers set up real-time monitoring systems to listen attentively to the pipeline’s heartbeat. This involves tracking performance metrics such as data processing times, resource utilization, and data flow rates. Real-time monitoring allows swift detection of anomalies or deviations and provides a continuous flow of insights into the pipeline’s health.

Performance Metrics and Key Indicators

Performance metrics act as the instruments, providing valuable cues about the pipeline’s performance. Data engineers track key indicators like data latency, throughput, error rates, and resource consumption, and use them to assess the pipeline’s performance periodically. Monitored over time, these indicators offer valuable insights into the pipeline’s efficiency.
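
As an illustration, the key indicators for one monitoring window could be computed like this in Python. The metric names, the nearest-rank percentile method, and the choice to count only successful records toward throughput are assumptions of this sketch.

```python
import math
from dataclasses import dataclass


@dataclass
class WindowMetrics:
    latency_p95_s: float
    throughput_per_s: float
    error_rate: float


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_s: list[float], failures: int, window_s: float) -> WindowMetrics:
    """Compute key indicators for one monitoring window.

    latencies_s: end-to-end latency (seconds) of each successfully processed record.
    failures: number of records that failed during the same window.
    window_s: length of the window in seconds.
    """
    processed = len(latencies_s) + failures
    return WindowMetrics(
        latency_p95_s=percentile(latencies_s, 95) if latencies_s else 0.0,
        throughput_per_s=len(latencies_s) / window_s,
        error_rate=failures / processed if processed else 0.0,
    )
```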

Proactive Alerting

To address potential issues in real time, data engineers configure proactive alerting systems. These systems send timely alerts whenever predefined thresholds are breached or anomalies are detected. Proactive alerting ensures swift problem detection, thereby minimizing potential disruptions.
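
Threshold-based alerting can be sketched as a list of rules evaluated against each window's metrics. The metric names, limits, and the `notify` callback (which might post to a chat channel or paging service) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Threshold:
    metric: str
    limit: float
    above: bool = True  # True: alert when value > limit; False: alert when value < limit


# Hypothetical thresholds; real values depend on the pipeline's service-level objectives.
THRESHOLDS = [
    Threshold("error_rate", 0.05),
    Threshold("latency_p95_s", 300.0),
    Threshold("throughput_per_s", 50.0, above=False),
]


def evaluate(metrics: dict[str, float], notify: Callable[[str], None]) -> list[str]:
    """Send one alert per breached threshold and return the alert messages."""
    alerts = []
    for t in THRESHOLDS:
        value = metrics.get(t.metric)
        if value is None:
            continue  # metric not reported in this window
        breached = value > t.limit if t.above else value < t.limit
        if breached:
            direction = "above" if t.above else "below"
            message = f"{t.metric}={value} is {direction} {t.limit}"
            notify(message)
            alerts.append(message)
    return alerts
```

A metric that stops being reported is simply skipped here; in practice a missing signal often deserves an alert of its own, since a silent pipeline may be a failed one.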

Error and Failure Detection

Data engineers implement error and failure detection mechanisms. By continuously monitoring data processing stages, they can swiftly identify errors or failures and trigger recovery actions that prevent data processing interruptions.

Logging and Auditing

Data engineers conduct logging and auditing that meticulously records the pipeline’s activities. Logs and audit trails provide a detailed record of data flow, transformations, and processing stages. This comprehensive documentation facilitates troubleshooting and performance analysis.

Centralized Monitoring Platforms

Data engineers centralize monitoring using dedicated platforms or dashboards. These centralized views offer a comprehensive picture of the pipeline’s performance, enabling data engineers to conduct analysis and swiftly identify potential issues across the entire pipeline.

Automated Remediation

Data engineers configure automated remediation actions. Automated remedies, like retries, task reassignment, or fallback mechanisms, enable recovery from failures and maintain the pipeline’s rhythm.
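
A fallback mechanism can be sketched as: try the primary source a few times, then switch to a secondary source instead of failing the run. The sources in this Python sketch are hypothetical callables, and the broad `except Exception` is a simplification you would narrow in real code.

```python
import logging
from typing import Callable, Optional, TypeVar

T = TypeVar("T")
logger = logging.getLogger("pipeline.remediation")


def with_fallback(primary: Callable[[], T], fallback: Callable[[], T], retries: int = 2) -> T:
    """Try the primary source up to `retries` times, then switch to the fallback."""
    last_error: Optional[Exception] = None
    for attempt in range(1, retries + 1):
        try:
            return primary()
        except Exception as exc:  # broad on purpose for this sketch
            last_error = exc
            logger.warning("primary failed (attempt %d/%d): %s", attempt, retries, exc)
    logger.error("switching to fallback after %d failed attempts: %s", retries, last_error)
    return fallback()
```

Logging every failed attempt and the switch itself keeps the remediation visible, so an automatic fallback does not quietly hide a degraded primary source.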

Scalable Monitoring Architecture

As the symphony of data processing expands, data engineers ensure a scalable monitoring architecture, in which the monitoring infrastructure scales to handle increasing data volumes and processing complexity.

Continuous Improvement

Data engineers continually refine the monitoring and alerting practices through periodic assessments and improvements. These refinements enhance the pipeline’s reliability and efficiency.

Monitoring and alerting form the vigilant guardians of data pipelines. With real-time monitoring, performance metrics, proactive alerting, error detection, logging, centralized platforms, automated remediation, scalable architecture, and continuous improvement, data engineers sustain the production of data-driven insights. This approach enables swift detection of potential issues, timely interventions, and seamless data flow, supporting informed decisions and strategic excellence.

Real-Life Use Cases of Data Pipelines

Data pipelines play a pivotal role in various real-life use cases. They orchestrate the flow of data that powers critical applications and insights, delivering impactful results across diverse domains.